Launching Gabber Cloud: Real-Time AI, Now Hosted

We’re excited to officially launch Gabber Cloud — a hosted orchestration and inference platform for building real-time, multimodal AI applications that can see, hear, and do.

Gabber started as an open-source project (github.com/gabber-dev/gabber). In just a few weeks it has grown to 1,000+ stars, with hundreds of developers experimenting with real-time agents, avatars, and assistants.

The feedback was consistent:

“This is awesome — but I don’t have a $3,000 GPU to run it.”

So we built Gabber Cloud.

What Gabber Is

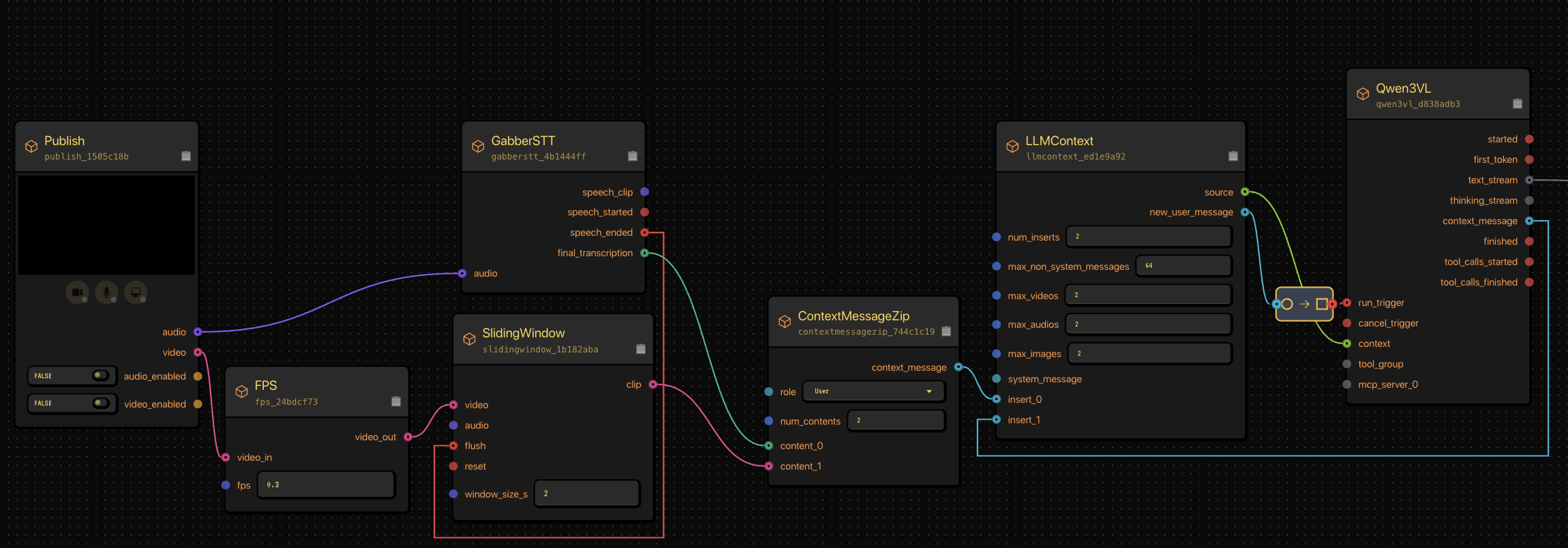

Gabber is a multimodal orchestration engine: a system designed to handle live streams of audio, video, and text, and route them through AI models and tools in real time.

It’s what you need when you want to build an app that’s always listening, seeing, or responding, not waiting for a single prompt.

Examples:

- A vision agent that monitors your workspace and talks to you.

- A voice assistant that transcribes and responds instantly.

- A companion that speaks, sees, and acts continuously.

- A control agent that manipulates your screen or environment.

With Gabber, these applications run continuously, not as a series of discrete API calls. Multiple models can run in parallel — informing each other, triggering actions, and maintaining state across multiple data streams.
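To make the contrast with discrete API calls concrete, here is a minimal sketch of a long-lived loop that consumes two streams and keeps shared state between them. It uses only Python's standard `asyncio` and is not the Gabber SDK: `source`, `run_continuous`, and the fake string chunks are all hypothetical stand-ins for real audio and video.

```python
import asyncio


async def source(name, items, out):
    """Push a finite list of fake chunks onto a queue, then a None sentinel."""
    for item in items:
        await out.put((name, item))
        await asyncio.sleep(0)  # yield control so the two streams can interleave
    await out.put((name, None))


async def run_continuous(audio_chunks, video_frames):
    """Consume both streams in one long-lived loop, keeping shared state."""
    inbox = asyncio.Queue()
    state = {"last_heard": None, "last_seen": None, "events": []}
    producers = [
        asyncio.create_task(source("audio", audio_chunks, inbox)),
        asyncio.create_task(source("video", video_frames, inbox)),
    ]
    open_streams = 2
    while open_streams:
        name, item = await inbox.get()
        if item is None:  # one of the streams has ended
            open_streams -= 1
            continue
        if name == "audio":
            state["last_heard"] = item
        else:
            state["last_seen"] = item
        # Each step can draw on the latest value from both streams.
        state["events"].append((state["last_heard"], state["last_seen"]))
    await asyncio.gather(*producers)
    return state


if __name__ == "__main__":
    final = asyncio.run(run_continuous(["hello"], ["frame0", "frame1"]))
    print(final["events"])
```

The point of the sketch is the shape of the program: nothing is "called" once per prompt. The loop stays alive, and every new chunk is interpreted against the state left behind by the other stream.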

Why We Built It

Real-time AI requires two things that the current AI stack doesn’t provide:

- A dedicated orchestration layer that can manage continuous streams of audio and video, not just text tokens.

- Inference that’s co-located with that orchestration, so every frame or sound can be processed with sub-second latency.

That’s what Gabber does.

Existing APIs are optimized for request/response interactions — great for chatbots, not for continuous systems. Gabber fills the gap between static LLM calls and real-time, multimodal AI.

What’s in Gabber Cloud

Gabber Cloud is the easiest way to build and deploy multimodal AI applications. It’s the same orchestration engine as the open-source project, but fully hosted, optimized, and production-ready.

Key features:

- $1/hour all-in inference for speech, vision, and language models

- Built-in models: Orpheus TTS (voice), Parakeet STT (speech-to-text), Qwen3-VL (vision-language)

- Real-time orchestration across multiple input streams (audio, video, text)

- Tool calling + MCP integration for action-taking AIs

- Starter templates + sample apps to get you building fast

- Automatic scaling, with no GPU setup required

You can stream live media from your mic, camera, or screen into Gabber Cloud, process it through any combination of models, and trigger actions or generate output instantly.

What Makes Gabber Different

Most “AI workflow” tools today fall into one of two categories:

- Tools for generating media or artifacts (e.g. ComfyUI, Blender).

- Tools for handling business logic and events (the many agent frameworks released this year).

Gabber is neither.

Gabber’s graph represents the flow of real-time media — how live audio and video move through models and logic continuously. It’s a system built to run every second, not every event.

You can have four inference nodes running simultaneously — STT, vision, LLM reasoning, and TTS — all feeding back into each other in under a second.
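As a rough illustration of what a graph with four concurrent nodes might look like, the sketch below wires STT, vision, LLM, and TTS stages together with `asyncio` queues as edges. This is an assumption-laden toy, not Gabber's engine or API: `node`, `run_graph`, and the four stub functions are hypothetical, the "models" are plain string transforms, and the graph is feed-forward rather than fully feeding back on itself.

```python
import asyncio

STOP = object()  # sentinel that marks the end of a stream


async def node(fn, inbox, outboxes, upstreams=1):
    """Run one node: apply fn to each item and fan non-None results out."""
    while upstreams:
        item = await inbox.get()
        if item is STOP:  # one upstream has finished
            upstreams -= 1
            continue
        result = fn(item)
        if result is not None:
            for q in outboxes:
                await q.put(result)
    for q in outboxes:  # propagate shutdown downstream
        await q.put(STOP)


async def run_graph(audio_chunks, video_frames):
    mic, cam, to_llm, to_tts, speaker = (asyncio.Queue() for _ in range(5))
    context = {"seen": None}  # state shared across the two input streams

    def stt(chunk):  # stand-in for a speech-to-text model
        return ("heard", chunk.upper())

    def vision(frame):  # stand-in for a vision-language model
        return ("seen", f"caption of {frame}")

    def llm(message):  # stand-in for LLM reasoning over both streams
        kind, text = message
        if kind == "seen":
            context["seen"] = text  # update context, say nothing
            return None
        return f"reply to {text} (seeing: {context['seen']})"

    def tts(text):  # stand-in for a text-to-speech model
        return f"<audio:{text}>"

    for chunk in audio_chunks:
        mic.put_nowait(chunk)
    mic.put_nowait(STOP)
    for frame in video_frames:
        cam.put_nowait(frame)
    cam.put_nowait(STOP)

    # All four nodes are scheduled as concurrent tasks on one event loop.
    await asyncio.gather(
        node(stt, mic, [to_llm]),
        node(vision, cam, [to_llm]),
        node(llm, to_llm, [to_tts], upstreams=2),
        node(tts, to_tts, [speaker]),
    )

    spoken = []
    while (item := speaker.get_nowait()) is not STOP:
        spoken.append(item)
    return spoken


if __name__ == "__main__":
    for line in asyncio.run(run_graph(["hello", "bye"], ["frame0"])):
        print(line)
```

Queues as edges keep each node independent: a node only knows its inbox and its outboxes, so adding a second consumer of the STT output means appending one more queue to that node's `outboxes` list.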

That’s the foundation of real-time, embodied AI.

Why Cloud Matters

The open-source engine is flexible, but many developers told us the same thing:

“I love the concept, but I just want to build — not set up GPUs and infra.”

Gabber Cloud removes that barrier. It’s designed so you can start from a browser, run live multimodal inference in minutes, and scale from prototype to production without managing infrastructure.

You can still run Gabber locally, connect your own models, or self-host. The core remains source-available under an n8n-style license that allows unlimited personal and internal use.

What’s Next

We’re already working on:

- New nodes and models (video understanding, emotion recognition, 3D environments)

- SDKs for Unity and ESP32

- More starter templates for vision, audio, and embodied use cases

- Improved dashboard, billing, and monitoring

Our goal is to make Gabber the easiest way to build continuous, multimodal AI applications — the ones that see, hear, and react in real time.

Get Started

- Explore gabber.dev

- Watch the launch video

- Read the Qwen3-VL real-time inference guide

- Star Gabber on GitHub

If you’re building something that runs in real time, Gabber Cloud is the missing piece of your stack.

Gabber Cloud — Real-time AI, hosted and ready to build on.