How To Run Real-Time Video Inference On Qwen3 VL and Omni Models

Qwen3 is changing what's possible in real-time video analysis. Google pioneered live video inference (feeding video streams to a model in real time for instant AI analysis), but production-ready alternatives have remained surprisingly scarce.

Perceptron, Moondream, Twelve Labs, and Reka are all contenders, but they focus on high-precision batch video analysis rather than true real-time streaming inference.

Qwen 2.5 introduced an Omni model, but it lacked the intelligence and accessible hosting infrastructure needed for production deployments.

Qwen3 changes everything. Combined with Gabber's visual graph builder, you can now build real-time video AI applications in minutes, not weeks.

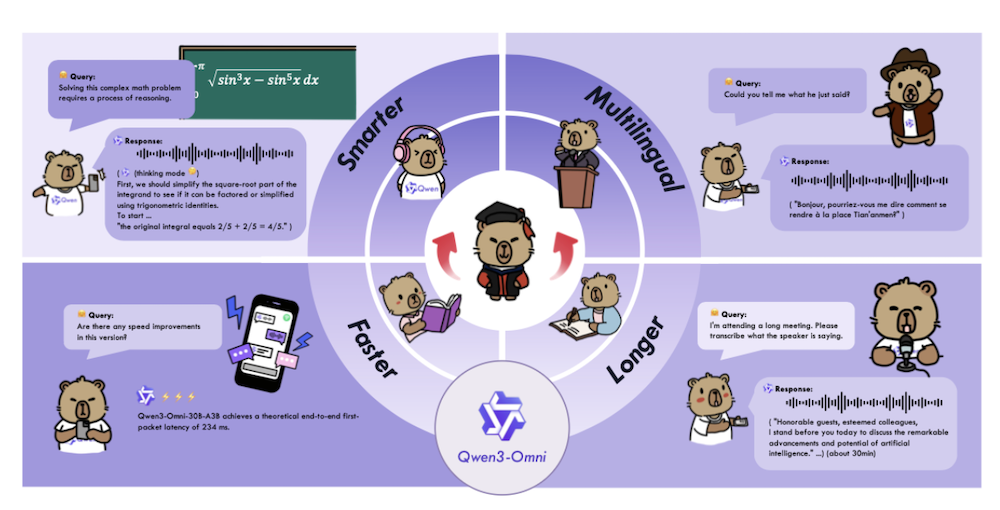

Why The Release Of Alibaba's Qwen3 VL and Omni Models Is A Big Deal

Qwen3 represents a significant leap forward in multimodal AI capabilities. Here's what makes it special:

Real-Time Video Processing

Unlike most vision-language models that process static images or batch video frames, Qwen3 VL can handle live video streams with minimal latency. This opens up entirely new use cases for AI-powered applications.

Enhanced Multimodal Understanding

Qwen3 doesn't just process video—it understands context, emotions, actions, and complex scene dynamics in real-time. This makes it perfect for applications requiring immediate response to visual input.

Production-Ready Performance

While Qwen 2.5 Omni existed, it lacked the intelligence and hosting infrastructure needed for real-world applications. Qwen3 addresses both these gaps.

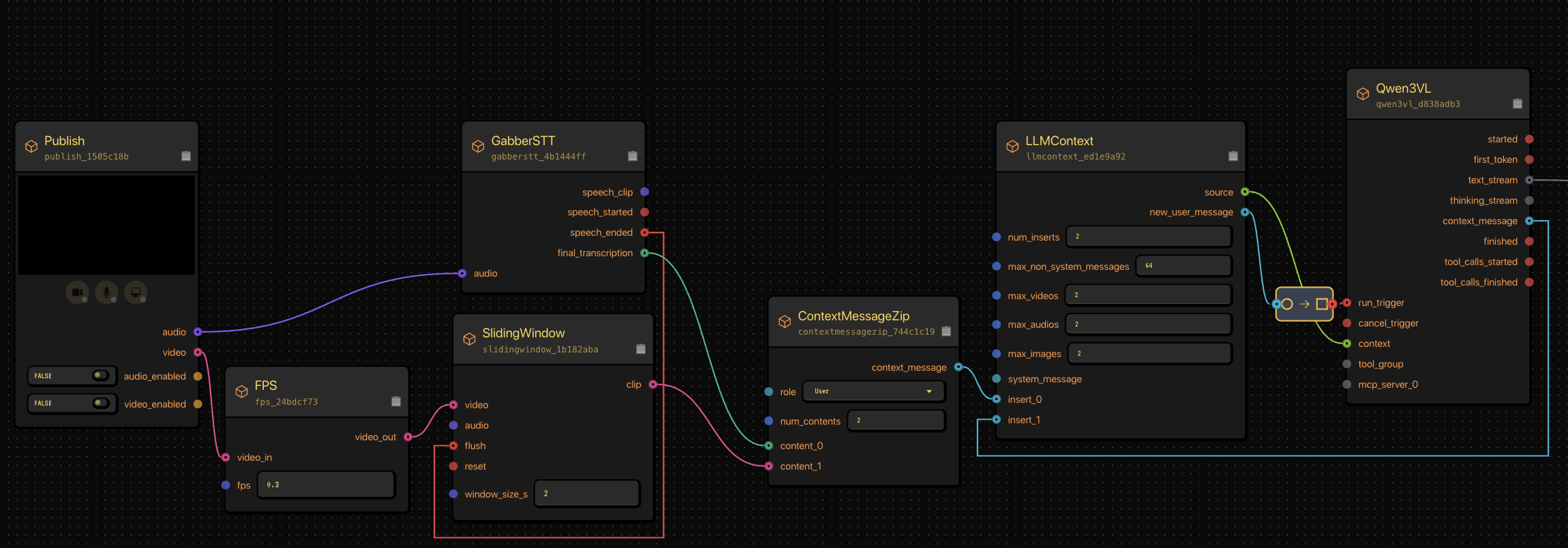

How To Build Real-Time Video AI Apps With Qwen3 Using Gabber's Graph Builder

Gabber makes it incredibly easy to integrate Qwen3 into your real-time applications using our visual graph builder—no complex infrastructure required. Here's the complete workflow:

Step 1: Sign Up and Access the Graph Builder

- Create your free account at app.gabber.dev

- Choose your starting point:

  - Browse pre-built Examples for common video AI use cases

  - Start from scratch with "Create New App"

Step 2: Build Your Video Processing Graph (No Code Required)

The Gabber graph builder uses a simple node-based architecture. Here's how to set up Qwen3 video inference:

1. Publish Node - Capture Media Input

- Accepts video/audio streams from webcam, screen share, or uploaded files

- Handles WebRTC connections automatically

- Supports multiple simultaneous media inputs

2. Context Node - Format Your Data

- Structures video frames and audio for the AI model

- Maintains conversation history

- Adds system prompts and instructions

3. Qwen3 LLM Node - Process & Analyze

- Select Qwen3 VL for vision tasks or Qwen3 Omni for multimodal tasks that include audio

- Configure parameters (temperature, max tokens, etc.)

- Get instant responses based on video content

4. Output Node - Deliver Results

- Stream responses back to your application in real-time

- Support for both audio and text outputs

- Low-latency WebRTC delivery
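The four nodes above form a simple linear pipeline. As a rough mental model only, the sketch below represents that wiring as plain JavaScript data and checks that media flows Publish → Context → LLM → Output in that order. The node `type` strings, the IDs, and `validatePipeline` are hypothetical; this is not Gabber's graph format or API, and real graphs can be richer than a single chain.

```javascript
// Hypothetical model of the Publish → Context → Qwen3 LLM → Output pipeline.
// Node types and IDs are illustrative; this is NOT Gabber's graph format.
const EXPECTED_ORDER = ['publish', 'context', 'llm', 'output'];

function validatePipeline(graph) {
  const byId = new Map(graph.nodes.map((n) => [n.id, n]));
  // The entry point is the only node with no incoming edge.
  const targets = new Set(graph.edges.map((e) => e.to));
  const roots = graph.nodes.filter((n) => !targets.has(n.id));
  if (roots.length !== 1) {
    return { ok: false, reason: `expected 1 entry node, found ${roots.length}` };
  }
  // Walk the chain by following each node's single outgoing edge.
  const order = [];
  const seen = new Set();
  let current = roots[0];
  while (current) {
    if (seen.has(current.id)) return { ok: false, reason: 'cycle detected' };
    seen.add(current.id);
    order.push(current.type);
    const next = graph.edges.filter((e) => e.from === current.id);
    if (next.length > 1) return { ok: false, reason: `${current.id} fans out` };
    current = next.length === 1 ? byId.get(next[0].to) : undefined;
  }
  const ok = order.join('>') === EXPECTED_ORDER.join('>');
  return ok ? { ok } : { ok, reason: `unexpected order: ${order.join(' > ')}` };
}

const graph = {
  nodes: [
    { id: 'publish_node_id', type: 'publish' },
    { id: 'context_1', type: 'context' },
    { id: 'qwen3_vl', type: 'llm', model: 'Qwen3 VL' },
    { id: 'output_node_id', type: 'output' },
  ],
  edges: [
    { from: 'publish_node_id', to: 'context_1' },
    { from: 'context_1', to: 'qwen3_vl' },
    { from: 'qwen3_vl', to: 'output_node_id' },
  ],
};
console.log(validatePipeline(graph)); // { ok: true }
```

Modeling the graph as data keeps the ordering rule explicit: the Context node must sit between the raw media and the model, and only the Output node's results go back to the client.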

Step 3: Test and Deploy Instantly

Cost: Just $1/hour for video processing. You pay only for what you use, with no upfront costs.

- Test in browser: Click "Run" to test with your webcam immediately

- Get your API endpoint: Grab your unique endpoint URL

- Deploy anywhere: Use our React SDK or REST API in your application
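Starting an app run is a Bearer-authenticated POST (the endpoint URL, the `app_id` body field, and the `connection_details` response all come from the React example in this article). That request is best made from your own server so your API key never reaches the browser. Below is a minimal Node.js 18+ sketch, assuming the built-in global `fetch`; `buildRunRequest`, `startAppRun`, and the `GABBER_API_KEY` environment variable are illustrative names, not part of Gabber's SDK.

```javascript
// Minimal server-side sketch (Node.js 18+, global fetch).
// Endpoint, body field, and response shape are taken from the article's
// React example; the helper names here are hypothetical.
const GABBER_RUN_URL = 'https://api.gabber.dev/v1/app/run';

// Build the request without sending it, so it can be inspected or tested.
function buildRunRequest(appId, apiKey) {
  if (!appId) throw new Error('appId is required');
  if (!apiKey) throw new Error('apiKey is required (keep it server-side)');
  return {
    url: GABBER_RUN_URL,
    init: {
      method: 'POST',
      headers: {
        'Content-Type': 'application/json',
        Authorization: `Bearer ${apiKey}`,
      },
      body: JSON.stringify({ app_id: appId }),
    },
  };
}

// Start a run and return the connection details for the browser client.
async function startAppRun(appId, apiKey = process.env.GABBER_API_KEY) {
  const { url, init } = buildRunRequest(appId, apiKey);
  const response = await fetch(url, init);
  if (!response.ok) {
    throw new Error(`Gabber run request failed: HTTP ${response.status}`);
  }
  const { connection_details } = await response.json();
  return connection_details;
}
```

A backend route in your app would call `startAppRun(appId)` and return the result as JSON; the browser then passes it to the SDK's `connect()`.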

Why Use Gabber for Qwen3 Video Inference?

1. Visual Graph Builder - No Complex Code

Build complete video AI pipelines by connecting nodes visually. Perfect for both developers and non-technical users.

2. Sub-Second Latency

Our optimized WebRTC infrastructure ensures Qwen3 responses stream back in real-time, making it viable for interactive applications.

3. Production Scale Infrastructure

Handle thousands of concurrent video streams. Gabber's enterprise-grade infrastructure scales automatically.

4. Extremely Cost Effective

At just $1/hour for video processing, Qwen3 on Gabber can be up to 10 times cheaper than building your own infrastructure. You pay only for actual usage.
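To make usage-based pricing concrete, here is a small estimator at the article's $1/hour rate. It assumes usage is prorated by the second, which is an assumption on our part: the article does not specify billing granularity or rounding rules. `estimateCostUsd` is a hypothetical helper.

```javascript
// Hypothetical cost estimate at the article's $1/hour video rate.
// ASSUMPTION: usage is prorated by the second; actual billing granularity
// and rounding rules are not stated in the article.
const RATE_USD_PER_HOUR = 1;

function estimateCostUsd(sessionSeconds, ratePerHour = RATE_USD_PER_HOUR) {
  const totalSeconds = sessionSeconds.reduce((sum, s) => sum + s, 0);
  const hours = totalSeconds / 3600;
  // Round to whole cents for display.
  return Math.round(hours * ratePerHour * 100) / 100;
}

// Example: 30 five-minute demo sessions plus one 2-hour monitoring session.
const sessions = [...Array(30).fill(5 * 60), 2 * 3600];
console.log(estimateCostUsd(sessions)); // 4.5
```

The 30 short sessions add up to 2.5 hours; together with the 2-hour session that is 4.5 hours, or $4.50.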

Real-World Qwen3 Video AI Use Cases

Live Video Analysis Applications

- Security & Surveillance: Real-time threat detection in video feeds with instant alerts

- Quality Control: Automated inspection of manufacturing processes and defect detection

- Sports Analytics: Live game analysis, player tracking, and performance metrics

- Healthcare: Patient monitoring and real-time medical imaging analysis

Interactive AI Applications

- Video Chatbots: AI companions that can see users and respond contextually

- Virtual Assistants: Screen-aware AI that helps with computer tasks

- Educational Tools: Real-time feedback on presentations, form, or technique

- Accessibility: Live description of visual content for visually impaired users

Content Creation & Media

- Live Streaming: Real-time captioning, moderation, and highlight detection

- Social Media: Automated content tagging, moderation, and trend detection

- Gaming: Dynamic difficulty adjustment and player behavior analysis

- Video Production: Automated editing suggestions and scene detection

Code Implementation: React Component for Qwen3 Video Inference

Once you've built your graph in the Gabber builder, integrating it into your React application is straightforward. Here's a complete component based on our production implementation:

Install Dependencies

```bash
npm install @gabber/client-react
```

Basic React Component

```jsx
'use client';

import React, { useRef, useState, useEffect } from 'react';
import { EngineProvider, useEngine } from '@gabber/client-react';

function VideoAIComponent({ appId }) {
  const { connect, publishToNode, getLocalTrack, subscribeToNode } = useEngine();

  const [localVideoTrack, setLocalVideoTrack] = useState(null);
  const [remoteVideoTrack, setRemoteVideoTrack] = useState(null);
  const [remoteAudioTrack, setRemoteAudioTrack] = useState(null);
  const [isConnected, setIsConnected] = useState(false);

  const localVideoRef = useRef(null);
  const remoteVideoRef = useRef(null);
  const audioRef = useRef(null);

  // Start a run of your Gabber app.
  // Keep your Gabber API key on the server: a 'use client' component can't read
  // server-only env vars, and any key bundled into client code is public.
  // '/api/gabber/run' is a hypothetical route on your own backend that forwards
  // { app_id } to https://api.gabber.dev/v1/app/run with your Bearer key and
  // returns the JSON response.
  const handleConnect = async () => {
    const response = await fetch('/api/gabber/run', {
      method: 'POST',
      headers: { 'Content-Type': 'application/json' },
      body: JSON.stringify({ app_id: appId }),
    });
    if (!response.ok) {
      console.error(`Failed to start app run: HTTP ${response.status}`);
      return;
    }
    const { connection_details } = await response.json();
    await connect(connection_details);
    setIsConnected(true);
  };

  // Publish the webcam to the Publish node
  const publishVideo = async () => {
    const track = await getLocalTrack({ type: 'webcam' });
    await publishToNode({
      localTrack: track,
      publishNodeId: 'publish_node_id', // From your graph
    });
    setLocalVideoTrack(track);
    if (localVideoRef.current) {
      track.attachToElement(localVideoRef.current);
    }
  };

  // Subscribe to the Output node for AI responses
  const subscribeToOutput = async () => {
    const sub = await subscribeToNode({
      outputOrPublishNodeId: 'output_node_id', // From your graph
    });
    sub.waitForVideoTrack().then((track) => {
      setRemoteVideoTrack(track);
      if (remoteVideoRef.current) {
        track.attachToElement(remoteVideoRef.current);
      }
    });
    sub.waitForAudioTrack().then((track) => {
      setRemoteAudioTrack(track);
      if (audioRef.current) {
        track.attachToElement(audioRef.current);
        // play() returns a promise that rejects if the browser blocks autoplay
        audioRef.current.play().catch(console.error);
      }
    });
  };

  // Once connected, publish the webcam and subscribe to the Output node
  useEffect(() => {
    if (isConnected) {
      publishVideo().catch(console.error);
      subscribeToOutput().catch(console.error);
    }
  }, [isConnected]);

  return (
    <div className="video-ai-container">
      <div className="video-grid">
        {/* Your video feed */}
        <div className="local-video">
          <video ref={localVideoRef} autoPlay muted playsInline />
          <label>Your Camera</label>
        </div>
        {/* AI's video response (if applicable) */}
        <div className="remote-video">
          <video ref={remoteVideoRef} autoPlay playsInline />
          <label>AI Response</label>
        </div>
      </div>
      <button onClick={handleConnect} disabled={isConnected}>
        {isConnected ? 'Connected' : 'Connect to Qwen3 AI'}
      </button>
      {/* Hidden audio element for AI voice responses */}
      <audio ref={audioRef} />
    </div>
  );
}

export default function App() {
  return (
    <EngineProvider>
      <VideoAIComponent appId="your-app-id-from-gabber" />
    </EngineProvider>
  );
}
```

Key Integration Points

- App ID: Get this from your Gabber graph builder after creating your app

- Node IDs: Each Publish and Output node has a unique ID visible in the graph builder

- Authentication: Generate API keys in your Gabber dashboard and keep them on your server, never in client-side code

- WebRTC Streams: Fully managed by our SDK - no manual WebRTC configuration needed
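A common integration mistake is shipping the placeholder IDs from the example component. A small guard like the hypothetical one below can catch that at startup. The environment variable names are assumptions for illustration; the placeholder strings are the ones used in the example component earlier in this article.

```javascript
// Hypothetical config loader for the App ID and node IDs listed above.
// Env var names are illustrative; placeholders match the article's example.
const PLACEHOLDERS = new Set([
  'your-app-id-from-gabber',
  'publish_node_id',
  'output_node_id',
]);

function loadGabberConfig(env) {
  const config = {
    appId: env.GABBER_APP_ID,
    publishNodeId: env.GABBER_PUBLISH_NODE_ID,
    outputNodeId: env.GABBER_OUTPUT_NODE_ID,
  };
  // Collect every key that is missing or still set to an example placeholder.
  const problems = Object.entries(config)
    .filter(([, value]) => !value || PLACEHOLDERS.has(value))
    .map(([key]) => key);
  if (problems.length > 0) {
    throw new Error(`Missing or placeholder Gabber config: ${problems.join(', ')}`);
  }
  return config;
}
```

Calling `loadGabberConfig(process.env)` once at server startup fails fast with a list of every bad value instead of surfacing as a silent connection error later.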

Getting Started: Building Your First Qwen3 Video AI App

Prerequisites

- A web browser (no coding required for graph building!)

- Optional: Node.js 16+ for React integration

- A Gabber account - Sign up free at app.gabber.dev

5-Minute Quick Start Guide

Step 1: Create Your Graph (2 minutes)

- Log into app.gabber.dev

- Click "Learn" → "Conversation w/ Video" or "Create New App"

- Drag and connect: Publish Node → Context Node → Qwen3VL Node → Output Node

- Configure the Qwen3 node:

  - Model: Select Qwen3VL

  - System prompt: "You are a helpful AI that can see and understand video"
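Conceptually, the Context node combines the system prompt above, recent conversation history, and the latest video frame into one model request. The sketch below illustrates that idea using the OpenAI-compatible chat format (with `image_url` content parts) that servers such as vLLM accept for Qwen vision models. This is an assumption for illustration, not a description of how Gabber's Context node works internally; `buildContext`, `maxMessages`, and the base64 frame input are hypothetical.

```javascript
// Sketch of what a Context step conceptually does before calling the model.
// ASSUMPTION: OpenAI-compatible chat messages with image_url content parts;
// this is not Gabber's internal Context node format.
function buildContext({ systemPrompt, history, frameBase64, userText, maxMessages = 6 }) {
  // Keep only the most recent messages so each request stays small and fast.
  // (slice(-0) would return everything, so handle 0 explicitly.)
  const recent = maxMessages > 0 ? history.slice(-maxMessages) : [];
  return [
    { role: 'system', content: systemPrompt },
    ...recent,
    {
      role: 'user',
      content: [
        { type: 'image_url', image_url: { url: `data:image/jpeg;base64,${frameBase64}` } },
        { type: 'text', text: userText },
      ],
    },
  ];
}

const messages = buildContext({
  systemPrompt: 'You are a helpful AI that can see and understand video',
  history: [
    { role: 'user', content: 'Hi!' },
    { role: 'assistant', content: 'Hello! I can see your camera.' },
  ],
  frameBase64: 'BASE64_JPEG_FRAME', // placeholder for a base64-encoded webcam frame
  userText: 'What am I holding?',
});
console.log(messages.length); // 4
```

Trimming history to a fixed window is one simple way to bound request size for a live stream; sending only the latest frame per turn keeps latency low at the cost of less temporal context.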

Step 2: Test In Browser (1 minute)

- Click the "Run" button in the graph builder

- Allow camera permissions when prompted

- Start talking and showing things to your camera

- Watch Qwen3 analyze and respond in real-time!

Step 3: Deploy to Your App (2 minutes)

- Copy your App ID from the graph builder

- Install the React SDK: `npm install @gabber/client-react`

- Drop in our component code (shown above)

- Replace appId and the 'publish_node_id' / 'output_node_id' placeholders with your own values

That's it! You now have production-ready real-time video AI running for just $1/hour.

Qwen3 Performance Benchmarks: Real-Time Video AI Comparison

Why Qwen3 on Gabber is the Best Choice for Real-Time Video AI

- 3 to 9 Times More Affordable: $1/hour vs $3-9/hour for competitors

- Fastest Setup: The visual graph builder takes you from prototype to production fast

- True Real-Time: WebRTC-based streaming with sub-200ms latency

- Multimodal Support: Qwen3 Omni processes both video AND audio simultaneously

- Production Ready: Enterprise-grade scalability from day one

Frequently Asked Questions (FAQ)

What makes Qwen3 different from GPT-4 Vision or Claude?

Qwen3 is designed specifically for real-time video streaming, not just static images. While GPT-4 Vision and Claude excel at analyzing single images or short video clips, Qwen3 can process live video feeds with audio in real-time, making it perfect for interactive applications.

How much does it cost to run Qwen3 on Gabber?

Just $1 per hour of video processing. You only pay for active usage - no monthly fees, no infrastructure costs. This is 3-10 times cheaper than competitors like Google's Live API or building your own infrastructure.

Do I need to know how to code to use Gabber's graph builder?

No! The visual graph builder lets you create complete AI video processing pipelines by dragging and connecting nodes. However, if you want to integrate it into your application, basic React knowledge helps (we provide complete code examples).

Can I use my own Qwen3 model or must I use Gabber's hosted version?

You can use either. Gabber provides fully hosted Qwen3 VL and Omni models optimized for low latency, and we handle all the infrastructure, scaling, and optimization so you can focus on building your application. If you'd rather bring your own model, use the open-source version of Gabber.

What's the difference between Qwen3 VL and Qwen3 Omni?

- Qwen3 VL: Vision-language model for analyzing video and images

- Qwen3 Omni: Multimodal model that processes video, images, AND audio together for richer understanding
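If you choose between the two programmatically, the distinction above reduces to one question: does the input include audio? The helper below is a hypothetical sketch of that rule; the returned strings are the display names used in this article, not API model identifiers.

```javascript
// Hypothetical helper mirroring the VL vs Omni distinction described above.
// Returned labels are display names, not real model identifiers.
function chooseQwen3Model({ video = false, images = false, audio = false } = {}) {
  if (audio) return 'Qwen3 Omni'; // audio understanding needs the Omni model
  if (video || images) return 'Qwen3 VL'; // vision-only input
  throw new Error('Expected at least one input modality');
}

console.log(chooseQwen3Model({ video: true, audio: true })); // Qwen3 Omni
console.log(chooseQwen3Model({ video: true })); // Qwen3 VL
```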

How do I get started with Qwen3 video inference?

- Sign up at app.gabber.dev

- Browse examples or create a new graph

- Connect Publish → Context → Qwen3 LLM → Output nodes

- Click "Run" to test with your webcam immediately

Conclusion: Build Real-Time Video AI Apps in Minutes, Not Months

Qwen3 represents a major breakthrough in real-time multimodal AI. Combined with Gabber's visual graph builder and $1/hour pricing, you can now build production-ready video AI applications without managing complex infrastructure, spending thousands on hardware, or hiring ML engineers.

Ready to Build with Qwen3?

Whether you're creating:

- 🎥 Real-time video analysis tools

- 🤖 Interactive AI companions that can see users

- 🔒 Security and surveillance systems

- 🎮 Gaming applications with visual AI

- 📱 Video chatbots and virtual assistants

- 💻 Computer Use Agents

Gabber makes it simple.

👉 Sign up free at app.gabber.dev and build your first real-time Qwen3 video processing AI app in 5 minutes.


Tags: Qwen3 VL, Qwen3 Omni, real-time video AI, multimodal AI, video inference, vision language model, AI video analysis, WebRTC video streaming, real-time video processing, AI graph builder, no-code AI, video chatbot, live video analysis, Alibaba Qwen, vision AI