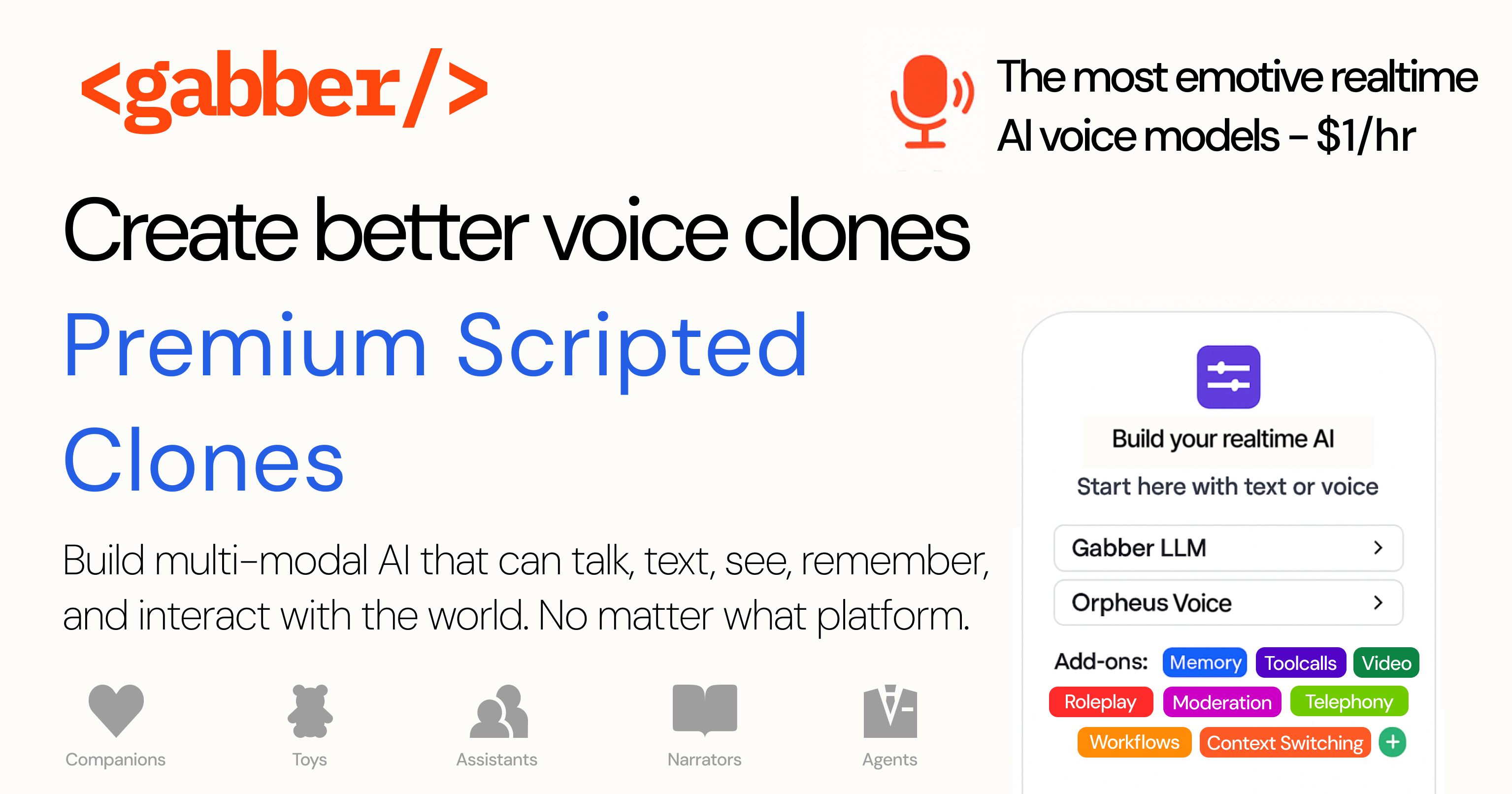

The Most Realistic Emotive AI Voice Clones That Can Laugh, Cry, Moan, and Change Tone

At Gabber, expressive, premium AI voice at $1/hr, cloning included, isn't just a feature. It's a foundational part of how we help you build believable AI personas. Gabber isn't just another voice platform: we're building an end-to-end backend for your AI personas, and expressive voice is table stakes for any persona you expect people to talk to for longer than a 5-minute phone call to process a refund or insurance claim.

Whether you're building companions, dungeon masters, smart toys, narrators, NPCs, or customer-facing agents, your clone's emotional realism matters, and I'm not just saying that because I'm about to write a blog post about it.

Flat, unexpressive voice is a complaint I hear all the time from people looking to switch from Cartesia, Play, and ElevenLabs.

Shoot, even the supposedly "expressive" platforms like Rime and Resemble's Chatterbox are only "good" compared to Cartesia or ElevenLabs. Compare them to a real human, which, you know, is the baseline, and they still sound bad.

That's why we've invested heavily in HD cloning: expressive, emotive, high-fidelity voice models built from your own recordings. No celebrity data, no guesswork, no cheap tricks.

In this post, we'll walk through the two ways you can provide source data, how they differ, and why a little more effort upfront can result in dramatically better output.

Method 1: Freestyle Audio (20–30 Minutes of Casual Speech)

This one sounds way cooler than it is, but it's still cool and really simple: provide 20–30 minutes of audio of yourself speaking. It can be anything, like a Zoom call, you reading Brave New World, or a bunch of loosely stitched recordings (we've done all of these).

The goal here is to provide enough clean data that our system can learn how you sound.
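If you're stitching several recordings together, it helps to check that they actually add up to the 20–30 minute target before uploading. Here's a minimal sketch using only Python's standard `wave` module. It assumes your clips are uncompressed PCM WAV files, so convert other formats first. The function names are hypothetical helpers, not part of any Gabber tooling.

```python
import wave
from typing import BinaryIO, Iterable, Union

# A path on disk or an already-open binary file object; wave.open accepts either.
AudioSource = Union[str, BinaryIO]


def wav_seconds(source: AudioSource) -> float:
    """Length of one uncompressed (PCM) WAV recording, in seconds."""
    with wave.open(source, "rb") as wav:
        # Frame count divided by frames per second gives the duration.
        return wav.getnframes() / wav.getframerate()


def total_minutes(sources: Iterable[AudioSource]) -> float:
    """Combined length of several recordings you plan to stitch together, in minutes."""
    return sum(wav_seconds(source) for source in sources) / 60.0


def in_target_range(
    sources: Iterable[AudioSource], low: float = 20.0, high: float = 30.0
) -> bool:
    """True if the recordings add up to the 20-30 minute range suggested above."""
    return low <= total_minutes(sources) <= high
```

For example, `in_target_range(["zoom_call.wav", "brave_new_world.wav"])` (file names hypothetical) tells you whether you need to record more before submitting. Length isn't the only thing that matters, though. The same 25 minutes from one flat recording will still produce a narrow clone.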

Our system can even:

- Parse podcasts into individual speakers

- Auto-transcribe and auto-label the speech for training

This works well, but there's an obvious catch: freestyle audio isn't structured, and it's almost always drawn from one or two recordings, so it lacks range. You can hear it in the clone.

Limitations of "Freestyle" Audio

- No emotional guidance - we have to guess whether a line was meant to be nervous, sarcastic, or angry.

- No emote tags - nothing like laugh, sigh, or moan to teach the model how to produce specific vocalizations.

- Transcriptions are noisy - even small errors in punctuation or speaker detection introduce weirdness into the TTS clone.

- Limited intonation variety - most podcasts use a fairly flat delivery, so your clone learns that tone and nothing else.

While this is still much better than a one-shot clone (it's a true LoRA fine-tune), it lacks the full emotional range needed for expressive contexts like roleplay or storytelling.

But what if you want your AI to sound real? That's where Method 2 comes in.

Method 2: Scripted Voice Capture with Emotion Tags

This method takes a bit more effort upfront, but the results are ridiculous.

You read from a 20-minute script we provide, where:

- Every line is perfectly transcribed

- We include explicit emotion cues, e.g.:

  - Emote tags like laugh, cry, sigh, moan

  - Segment-level tags: (nervous), (suspenseful)

- You vary your tone and delivery, deliberately showcasing your range

Example Lines and Emotions

You went to the concert without me? sigh We had talked about going together for months!

Do you hear that? sniffle The wind hasn't stopped and I'm freezing.

I can feel my heart racing sigh. What if something goes wrong during surgery?

I keep checking my phone for a callback about the job sigh. Why haven't they called yet?
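The example lines above show the emote tags as bare words, and the post doesn't specify the exact markup. As a hypothetical, suppose emote tags are written in angle brackets like `<sigh>` and a segment-level tag like `(nervous)` opens a line. Under that assumption, a script line could be validated and split before training roughly as follows. `parse_script_line`, `ScriptLine`, and the tag vocabulary are illustrative names, not Gabber's API.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical markup (not specified in the post): emote tags like <sigh>,
# plus an optional segment-level tag like (nervous) at the start of a line.
EMOTE_TAG = re.compile(r"<(\w+)>")
SEGMENT_TAG = re.compile(r"^\((\w+)\)\s*")

# Illustrative vocabulary: the emote tags named in this post.
KNOWN_EMOTES = {"laugh", "cry", "sigh", "moan", "sniffle"}


@dataclass
class ScriptLine:
    segment: Optional[str]  # e.g. "nervous", or None when the line has no segment tag
    emotes: List[str]       # emote tags in the order they appear
    spoken_text: str        # the words alone, with every tag stripped out


def parse_script_line(raw: str) -> ScriptLine:
    """Split one tagged script line into segment tag, emote tags, and plain text."""
    match = SEGMENT_TAG.match(raw)
    segment = match.group(1) if match else None
    body = raw[match.end():] if match else raw

    emotes = EMOTE_TAG.findall(body)
    unknown = [tag for tag in emotes if tag not in KNOWN_EMOTES]
    if unknown:
        # Reject typos like <sighh> before they reach the training data.
        raise ValueError(f"unsupported emote tag(s): {unknown}")

    # Drop the tags, collapse leftover whitespace, and remove any space
    # left in front of punctuation (e.g. "racing <sigh>." -> "racing.").
    spoken = " ".join(EMOTE_TAG.sub("", body).split())
    spoken = re.sub(r"\s+([.,!?])", r"\1", spoken)
    return ScriptLine(segment=segment, emotes=emotes, spoken_text=spoken)
```

Here is what it does with one of the example lines. `parse_script_line("(nervous) Do you hear that? <sniffle> The wind hasn't stopped and I'm freezing.")` yields the segment `"nervous"`, the emotes `["sniffle"]`, and the spoken text `"Do you hear that? The wind hasn't stopped and I'm freezing."` The post doesn't say whether the training transcript keeps the tags inline or stores them separately. This sketch only validates and separates them.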

Why it works so well:

- The transcription matches perfectly (no ASR noise)

- The model sees rich emotional examples

- We can anchor specific tags to audio features

- We create a much more emotionally intelligent voice model

Under the Hood: The LoRA Finetune

In both cases, Gabber creates a LoRA adapter: a small, specialized fine-tune on top of our base voice model that makes the clone sound like you. But the quality of the data feeding that LoRA is everything.
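The post doesn't say which layers Gabber adapts or what rank it uses. The sketch below therefore only illustrates the standard LoRA idea behind "a small fine-tune on top of a base model." The base weight W stays frozen. Training learns two small matrices, B (d × r) and A (r × k), and their product, scaled by alpha / r, is added on top. It is plain Python with hypothetical helper names (`merge_lora`, `trainable_params`), not Gabber's code.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: each row of `a` dotted with each column of `b`."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(w: Matrix, b: Matrix, a: Matrix, alpha: float) -> Matrix:
    """Return W + (alpha / r) * (B @ A), the weight an adapted layer effectively uses.

    W (d x k) is the frozen base weight; only B (d x r) and A (r x k) are trained.
    """
    r = len(a)  # adapter rank: rows of A, which equals the columns of B
    delta = matmul(b, a)
    scale = alpha / r
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


def trainable_params(d: int, k: int, r: int) -> Tuple[int, int]:
    """Trainable parameters for one d x k layer: (full fine-tune, LoRA adapter)."""
    return d * k, r * (d + k)
```

As a small check, `merge_lora([[1.0, 0.0], [0.0, 1.0]], [[1.0], [2.0]], [[3.0, 4.0]], 1.0)` applies a rank-1 update to a 2 × 2 identity and returns `[[4.0, 4.0], [6.0, 9.0]]`. The parameter count explains why the adapter is "small." `trainable_params(4096, 4096, 16)` returns `(16777216, 131072)`, so a rank-16 adapter on a 4096-wide square layer trains 128× fewer parameters than a full fine-tune of that layer. Those dimensions are purely illustrative.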

Here's how the source data shows up in the finished clone:

- One-shot (Cartesia/ElevenLabs): rough match, flat, inconsistent, prone to errors

- Premium Clone, Freestyle Audio: good match, moderate, mixed, very good in a narrow range

- Premium Clone, Scripted + Tagged: perfect match, wide and expressive, varied, perfect across emotions

Want to dive deeper into how LoRA works?

Check out our LoRA breakdown →

TL;DR: The Better the Data, the Better the Clone

Your voice clone will only ever be as good as the data you provide. And while our models are best-in-class, you get dramatically better results when you guide the model with the right data, tags, emotions, and vocal range.

Want to sound alive? Take the time to read the script. Your users will feel the difference.

As of early June 2025, we're productionizing the system. If you're impatient, demand access by joining the Discord or emailing [email protected]. I'll respond quickly and we can get you started. Bonus points if you send a video of yourself doing the emotes; it's hilarious.

$39 per clone. $1/hour to use. Unlimited personality.