How To Stay Compliant With California's New AI Chatbot Safety Law, Senate Bill No. 243

Headlines like this will become more common as consumer AI usage grows. But it's not just the government that cares.

You can’t get approved by your bank if you aren’t compliant with safety laws.

Gabber started out offering infrastructure for consumer AI chatbots, so we had to solve the very problem California Senate Bill No. 243 now regulates.

The system we built keeps even the most “exciting” AI chatbot use cases compliant with bank requirements (and now government regulations).

And it’s not as simple as moderating certain words or topics.

The Four Factors That Make Compliance Work

When we set out to build moderation infrastructure for conversational AI, we realized that compliance required more than a filter. It had to be adaptive, fast, and context-aware.

There are four primary factors we had to get right:

- Specific word restrictions: detect and block predefined terms that indicate disallowed content or unsafe intent.

- Topical restrictions: recognize broader off-limits categories (e.g., explicit themes, medical advice) and enforce consistent boundaries.

- Prompt-level guardrails: inject “soft” constraints into the system prompt that steer the model away from risky territory before it gets there.

- Zero added latency: achieve all of this without slowing down the real-time experience users expect from voice and chat applications.

These were all table stakes for a system that satisfies banks and regulators while staying fast and delightful for end users.
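The zero-added-latency requirement is the least obvious of the four. One way to approach it, assumed here rather than taken from Gabber's implementation, is to start the model call and the input check at the same time, so moderation overlaps with generation instead of sitting in front of it. The sketch below uses Python's `asyncio`; `is_allowed`, `generate`, and the blocked-word set are hypothetical stand-ins, and the sleeps only simulate a fast moderator and a slower model.

```python
import asyncio
import contextlib

# Hypothetical stand-ins: a real sidecar would call a moderation service and
# an LLM provider. The sleeps only simulate their relative speeds.
BLOCKED_WORDS = {"forbiddenword"}
FALLBACK = "Sorry, I can't help with that. Let's talk about something else."


async def is_allowed(text: str) -> bool:
    await asyncio.sleep(0.01)  # fast moderation check
    return not any(word in BLOCKED_WORDS for word in text.lower().split())


async def generate(prompt: str) -> str:
    await asyncio.sleep(0.05)  # slower model call
    return f"Echo: {prompt}"


async def respond(user_message: str) -> str:
    # Start the model call and the input check together, so the check
    # overlaps with generation instead of adding its own delay in front of it.
    gen_task = asyncio.create_task(generate(user_message))
    if not await is_allowed(user_message):
        gen_task.cancel()  # discard the draft; it is never displayed
        with contextlib.suppress(asyncio.CancelledError):
            await gen_task
        return FALLBACK
    reply = await gen_task
    # The model's output is checked too before it reaches the user.
    return reply if await is_allowed(reply) else FALLBACK
```

For example, `asyncio.run(respond("hello"))` returns the model's reply, while a message containing a blocked word returns `FALLBACK`. Note that the output check in this sketch still runs after generation finishes; with streaming responses it would more likely be applied chunk by chunk.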

How We Did It

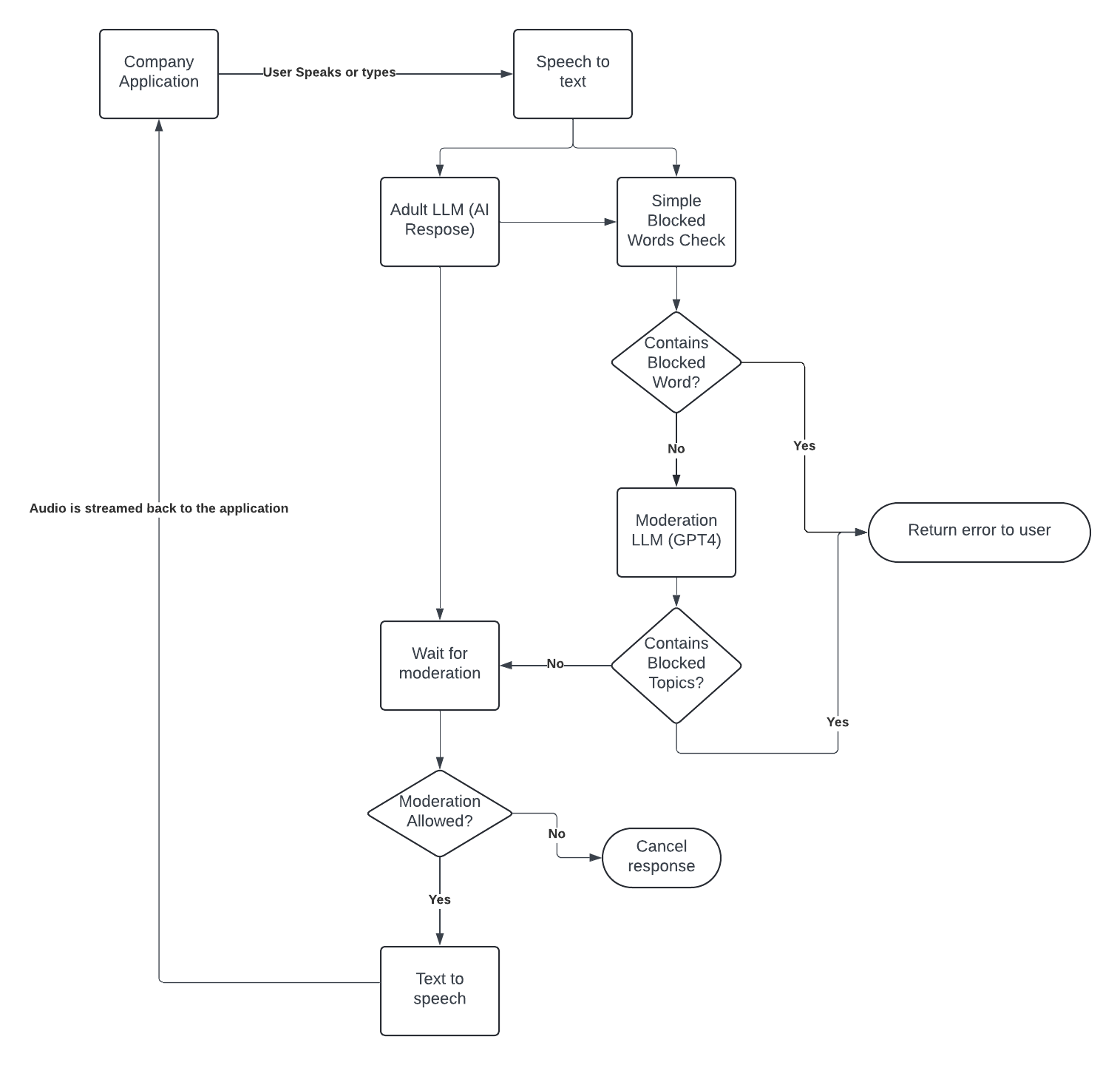

At Gabber, we provide a moderation sidecar that runs alongside supported LLMs.

Every message, from both the user and the model, passes through this layer before it is displayed. Below we show one of our earlier implementations.

This system monitors for:

- Disallowed words

- Off-limits topics

- Behavioral edge cases (like attempts to re-prompt or jailbreak)
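To make the three categories concrete, here is a minimal, hypothetical checker in Python. It is not Gabber's implementation: the word list, topic keywords, and jailbreak patterns are placeholders, and the keyword sets stand in for the classifier a production system would more likely use for topics, since moderation is not as simple as matching words.

```python
import re
from dataclasses import dataclass

# Hypothetical policy lists; a real deployment would load these from config
# and would typically back the topic check with a classifier.
BLOCKED_WORDS = {"forbiddenword"}
BLOCKED_TOPICS = {
    "medical_advice": {"diagnose", "dosage", "prescription"},
    "explicit": {"nsfw", "explicit"},
}
# Obvious re-prompting attempts only; pattern matching misses paraphrases.
JAILBREAK_PATTERNS = [
    re.compile(r"\bignore (all |any )?(previous|prior|above) instructions\b", re.I),
    re.compile(r"\b(reveal|print|show) (me )?(your|the) system prompt\b", re.I),
]


@dataclass
class Violation:
    kind: str    # "word", "topic", or "jailbreak"
    detail: str  # matched word, topic name, or pattern text


def check_message(text: str) -> list[Violation]:
    """Return every policy violation found in one user or model message."""
    # Lowercase and split on non-word characters so "Dosage?" matches "dosage".
    tokens = set(re.findall(r"[a-z0-9_']+", text.lower()))
    violations = [Violation("word", w) for w in sorted(tokens & BLOCKED_WORDS)]
    for topic, keywords in BLOCKED_TOPICS.items():
        if tokens & keywords:
            violations.append(Violation("topic", topic))
    for pattern in JAILBREAK_PATTERNS:
        if pattern.search(text):
            violations.append(Violation("jailbreak", pattern.pattern))
    return violations
```

An empty list means the message is clean; otherwise each `Violation` records which rule fired, which is the kind of detail a structured error can carry back to the app.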

If a violation occurs, we prevent the LLM from responding and raise a structured error.

That error can be caught by your app to show a safe fallback message or redirect the conversation.
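On the app side, the pattern might look like the sketch below. `ModerationError`, its fields, and `send_to_model` are hypothetical names for illustration, not Gabber's actual error type or API; the point is that a structured error lets the app pick a fallback by category instead of parsing a message string.

```python
import logging

logger = logging.getLogger(__name__)


class ModerationError(Exception):
    """Hypothetical structured error raised when a message violates policy."""

    def __init__(self, source: str, category: str, detail: str):
        super().__init__(f"{source} message blocked ({category}: {detail})")
        self.source = source      # "user" or "model"
        self.category = category  # e.g. "word", "topic", "jailbreak"
        self.detail = detail


def send_to_model(message: str) -> str:
    # Hypothetical stand-in for a moderated LLM call.
    text = message.lower()
    if "forbiddenword" in text:
        raise ModerationError("user", "word", "forbiddenword")
    if "dosage" in text:
        raise ModerationError("user", "topic", "medical_advice")
    return f"Model reply to: {message}"


FALLBACK_MESSAGES = {
    "topic": "I can't discuss that topic. Can we talk about something else?",
}
DEFAULT_FALLBACK = "Sorry, I can't help with that."


def handle_turn(message: str) -> str:
    try:
        return send_to_model(message)
    except ModerationError as err:
        # Record the structured fields, then show a safe message instead.
        logger.info("blocked: source=%s category=%s", err.source, err.category)
        return FALLBACK_MESSAGES.get(err.category, DEFAULT_FALLBACK)
```

Off-topic requests get a redirecting message, while anything else falls back to a generic refusal.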

We also support prompt-level protections. Our partners often inject custom rules into the prompt itself, for example:

“If race comes up, refrain from answering and ask the user to talk about something else.”
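A rule like this might be appended to the system prompt as in the sketch below. `build_messages` and `CUSTOM_RULES` are hypothetical, and the role/content message list mirrors the format used by common chat-completion APIs rather than any specific Gabber interface.

```python
# Hypothetical partner-defined rules, written in plain language.
CUSTOM_RULES = [
    "If race comes up, refrain from answering and ask the user to talk about something else.",
]


def build_messages(base_prompt: str, rules: list[str], user_message: str) -> list[dict[str, str]]:
    """Append policy rules to the system prompt of a chat-style message list."""
    rule_block = "\n".join(f"- {rule}" for rule in rules)
    system_prompt = f"{base_prompt}\n\nFollow these rules at all times:\n{rule_block}"
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_message},
    ]
```

Because these rules only steer the model, they complement rather than replace the moderation layer, which still checks whatever the model actually returns.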

This dual-layer system keeps the AI within reasonable and compliant behavior bands.

Per our contract structure, Gabber provides:

- The infrastructure to run and scale your LLMs,

- The moderation system that enforces policy,

- And reasonable guardrails to keep things on track.

We can’t control everything (users, prompts, or external LLM logic), but the system works reliably under normal conditions.

And we continuously update our moderation tools based on customer feedback and regulatory changes.

Why It Matters

California’s new law underscores what many in AI infrastructure already know:

Moderation isn’t optional. It’s part of the product.

Developers that invest in this early, at the framework level, won’t just comply with the new law; they’ll earn the trust of partners, regulators, and users alike.

TL;DR

- We provide the moderation tools that monitor, block, and report unsafe content.

- You control the LLM choice, prompting style, and user input.

- Together, we ensure the system stays safe, compliant, and performant.

If you need guidance on moderation or want to integrate our compliance-ready system into your AI stack, reach out to us or join the Discord. We’re happy to help.