How I Built A Multi-Participant Conversational AI With Multiple Humans and Multiple AI

Ever since I started building in the realtime voice space, the same question keeps coming up: can two or more humans talk to one AI, or can two or more AIs talk to one human? Recently someone asked me about a many-to-many AI-to-human system.

There's clearly something here.

This problem has always been difficult for two reasons:

- How do you ingest multiple media streams into the system?

- How does the AI actually process these streams?

Until recently, these problems were either unsolvable or needed a bespoke system whose cost was hard to justify.

But that changed recently. Gabber exists now. LLMs have gotten better.

My hands were tied. I had to build it.

What Is A Multi-Participant Conversational AI Voice Bot?

Great question.

Since I started building AI voice agents, I've probably heard these two questions more than any others:

- "Can two humans talk to it?"

- "Can two AIs talk?"

I've said "no" to these a bunch of times, and it wasn't a "No, but maybe soon." It was:

No, and I'm not really sure when that'll be feasible for production use cases.

(i.e. don't hold your breath).

But people clearly want it. They want to move beyond one-on-one conversations with AI:

- Couples therapy

- Mini-games

- Group chats with friends

Until now, conversational AI has been limited to one AI and one human taking turns. That's no longer the case.

Bring in multiple AIs. Have two or more humans talk to one AI, or to several.

And maybe you don't want the AI to always respond. Strict turn-taking feels odd in a real conversation. The AI should speak only when it has a reason to, not every time someone says something.

Building A Conversational AI With Multiple Human and AI Participants

Building this thing was initially a question of avoiding over-engineering and not reinventing the wheel.

As mentioned, the biggest challenges were at the LLM layer and the ingestion layer.

LLM Labeling

For the LLM, I didn't do anything wild.

- I labeled each line of the conversation with the name of the speaker.

- I checked whether the model got confused about who said what.

- With a "smart-ish" LLM like GPT-4.1-mini, it was a non-issue.

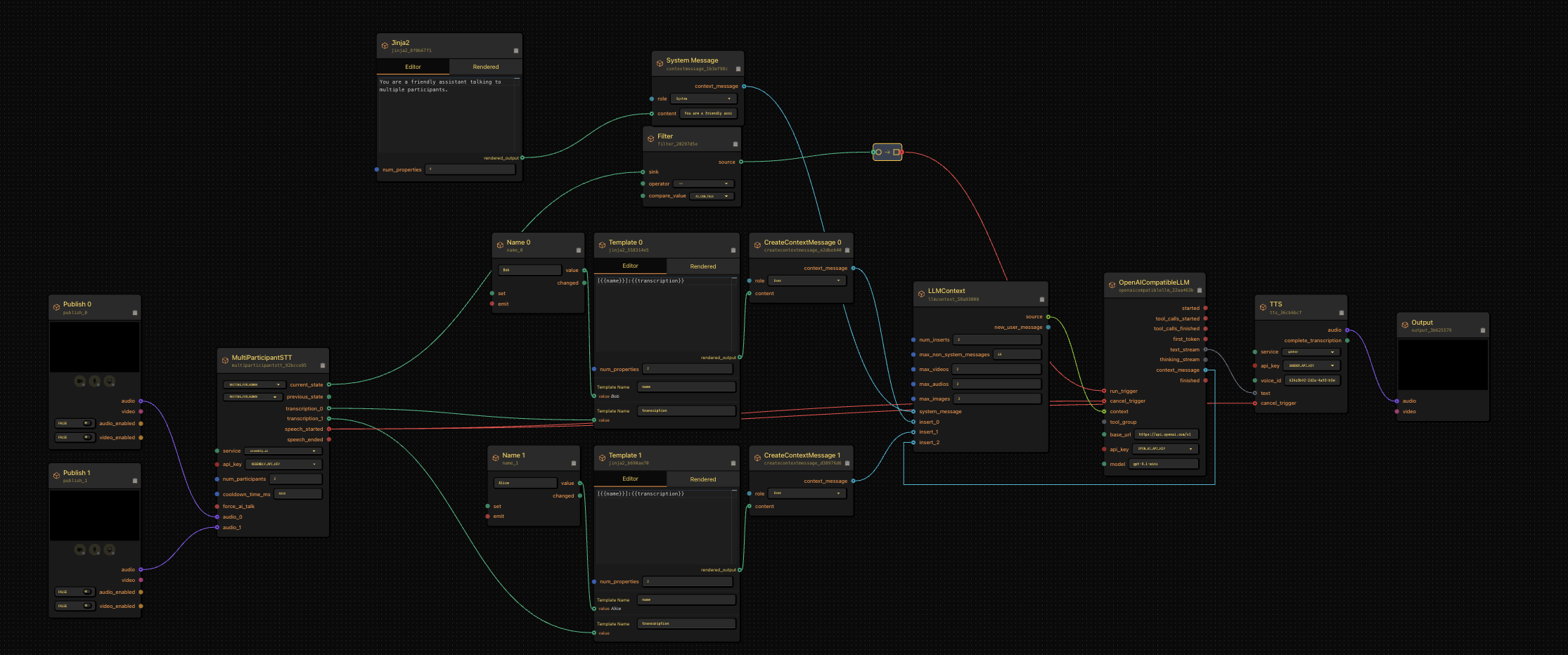

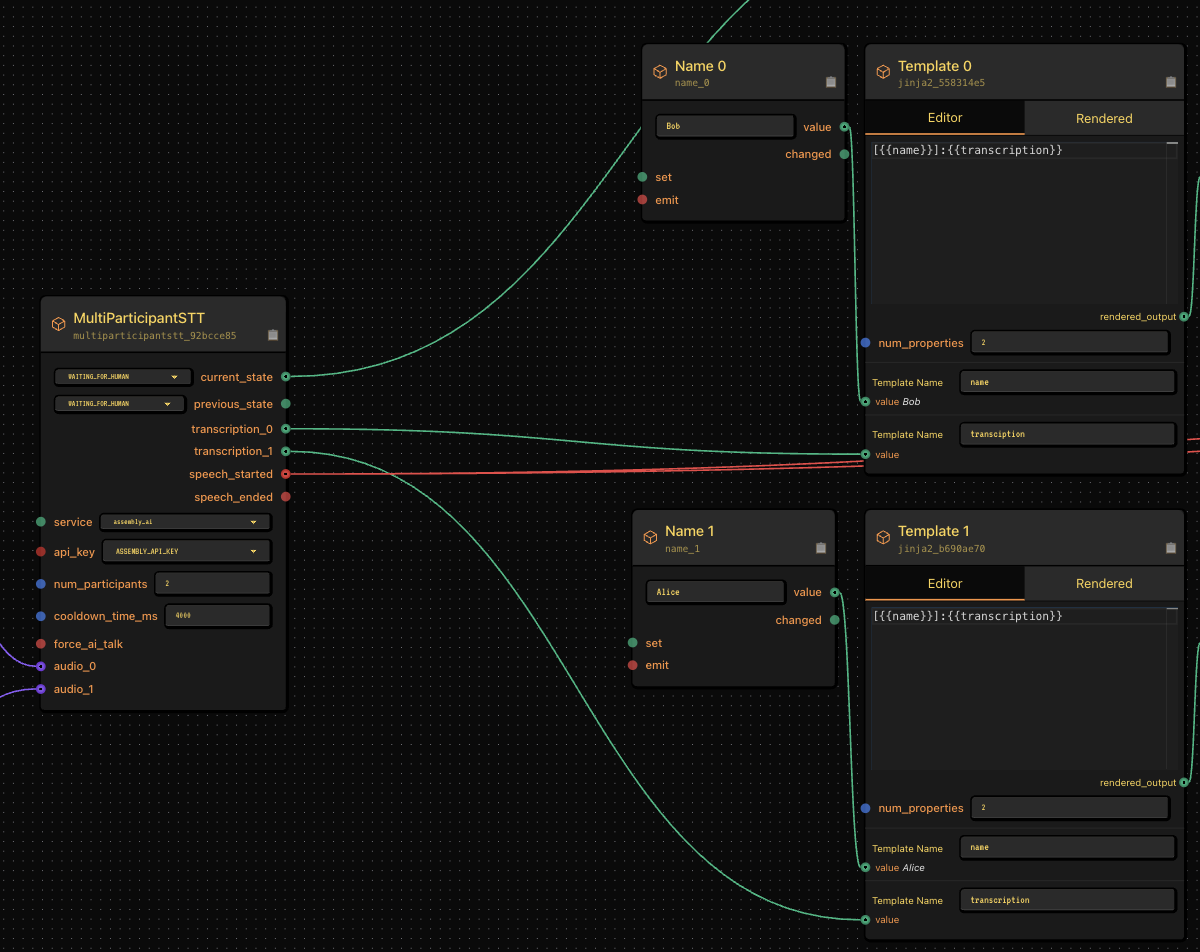

For labeling, I used Jinja to prefix the text output from the multi-participant Speech-to-Text node with the speaker's name.
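The labeling step amounts to attaching the speaker's name to each transcript line before it reaches the LLM. Here is a minimal sketch of that transformation in plain Python rather than in a Jinja node; a Jinja template along the lines of `{{ speaker }}: {{ text }}` would produce the same result. The `Utterance` class, its field names, and the chat-message shape are assumptions for illustration, not Gabber's actual data model.

```python
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str  # hypothetical field: participant name attached upstream
    text: str     # hypothetical field: transcribed speech for that participant


def label(utterance: Utterance) -> str:
    """Prefix the transcript with the speaker's name, e.g. 'Bob: hello'."""
    return f"{utterance.speaker}: {utterance.text.strip()}"


def to_user_messages(utterances: list[Utterance]) -> list[dict[str, str]]:
    """Turn labeled utterances into chat-style messages for an LLM."""
    return [{"role": "user", "content": label(u)} for u in utterances]


turns = [
    Utterance("Bob", "AI, if you were a sandwich, what kind would you be? "),
    Utterance("Alice", "No way, you'd definitely be a grilled cheese."),
]
messages = to_user_messages(turns)
for message in messages:
    print(message["content"])
```

Keeping every human turn in the same `user` role and relying on the name prefix is the simplest option; it leaves it to the model to track who said what.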

The system prompt just worked, which I didn't necessarily expect, but it was a nice bonus.

Here's what the conversation actually looked like:

Bob: "AI, if you were a sandwich, what kind of sandwich would you be?"

AI: "I'd be a BLT - because I'm always trying to bring home the bacon with my language processing!"

Alice: "No way, you'd definitely be a grilled cheese. Simple, comforting, and you melt under pressure."

Bringing Two Audio Sources Into A Conversational AI System

This was trickier.

I tinkered with a handful of approaches:

- Diarization

- Separate STT streams

- Etc.

Ultimately, I decided on two separate publish nodes and a merge node with logic to:

- Detect who was talking

- Pipe the text to the appropriate Jinja labeling node
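As a rough illustration of the merge idea, the sketch below tags each transcript segment with the participant it came from and interleaves the two streams by start time. It assumes each stream is already transcribed separately and arrives in time order; the `Transcript` type and the `tag` and `merge` helpers are hypothetical names, not Gabber nodes.

```python
import heapq
from dataclasses import dataclass
from typing import Iterable, Iterator


@dataclass(frozen=True)
class Transcript:
    start: float      # seconds since session start (assumed to come from STT)
    participant: str  # which publish node the audio came from
    text: str


def tag(participant: str, segments: Iterable[tuple[float, str]]) -> Iterator[Transcript]:
    """Attach the source participant to each (start_time, text) segment."""
    for start, text in segments:
        yield Transcript(start, participant, text)


def merge(*streams: Iterable[Transcript]) -> Iterator[Transcript]:
    """Interleave per-participant streams in the order people spoke.

    Each input stream must already be sorted by start time.
    """
    return heapq.merge(*streams, key=lambda t: t.start)


bob = tag("Bob", [(0.0, "Anyone up for a game?"), (6.5, "I'll go first.")])
alice = tag("Alice", [(3.2, "Sure, what are the rules?")])

conversation = [f"{t.participant}: {t.text}" for t in merge(bob, alice)]
print("\n".join(conversation))
```

Because the participant is known from the stream itself, there is no need to infer the speaker from the audio, which is the main appeal of separate publish nodes over diarization.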

Putting It All Together

The pieces connect like this:

- The transcript from the multi-participant node is fed into the labeling node

- The labeled text goes into the LLM

I put a run trigger on the LLM so that it only generates a response when no human is talking.
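One way to picture that trigger is a small gate that tracks who is currently speaking and only opens once the room is quiet. This is a sketch under assumptions: the `ResponseGate` class and its method names are made up for illustration, and it presumes something upstream (voice activity detection, for example) reports when each participant starts and stops talking.

```python
class ResponseGate:
    """Allows LLM generation only when no human is mid-utterance."""

    def __init__(self) -> None:
        self._speaking: set[str] = set()
        self._pending = False  # True when new speech has arrived since the last reply

    def speech_started(self, participant: str) -> None:
        self._speaking.add(participant)

    def speech_ended(self, participant: str) -> None:
        self._speaking.discard(participant)
        self._pending = True

    def should_run(self) -> bool:
        """True once the room is quiet and there is something new to answer."""
        return self._pending and not self._speaking

    def mark_responded(self) -> None:
        self._pending = False


gate = ResponseGate()
gate.speech_started("Bob")
gate.speech_started("Alice")   # Alice starts talking over Bob
gate.speech_ended("Bob")
print(gate.should_run())       # Alice is still talking, so the gate stays closed
gate.speech_ended("Alice")
print(gate.should_run())       # room is quiet and new speech is pending
gate.mark_responded()
print(gate.should_run())       # nothing new since the last reply
```

The `_pending` flag keeps the AI from replying repeatedly during a long silence; it only gets one response per batch of new speech.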

This is the simplest form of end-of-turn detection, but you can imagine something more advanced:

- Another LLM running to determine when the AI should respond

- Context + personality-driven response timing
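To make the first of those ideas concrete, here is a hedged sketch of a "should the AI speak?" check that delegates the decision to a second model. Everything here is hypothetical: the `Judge` callable stands in for whatever model call you would use, the prompt wording and the six-turn window are arbitrary, and `addressed_judge` is an offline stand-in so the example runs without a real LLM.

```python
import re
from typing import Callable

Judge = Callable[[str], str]  # hypothetical: takes a prompt, returns "yes" or "no"


def build_prompt(history: list[str], ai_name: str) -> str:
    """Ask a second model whether the AI should speak next."""
    transcript = "\n".join(history[-6:])  # recent turns only; window size is arbitrary
    return (
        f"You moderate a group voice chat. {ai_name} is an AI participant.\n"
        f"Transcript:\n{transcript}\n"
        f"Should {ai_name} speak next? Answer yes or no."
    )


def should_respond(history: list[str], ai_name: str, judge: Judge) -> bool:
    """Return True when the judge's answer starts with 'yes'."""
    answer = judge(build_prompt(history, ai_name))
    return answer.strip().lower().startswith("yes")


def addressed_judge(ai_name: str) -> Judge:
    """Offline stand-in for a model: says yes only if the last turn names the AI."""
    pattern = re.compile(rf"\b{re.escape(ai_name)}\b", re.IGNORECASE)

    def judge(prompt: str) -> str:
        last_turn = prompt.splitlines()[-2]      # line just before the final question
        spoken = last_turn.split(":", 1)[-1]     # drop the "Speaker:" label
        return "yes" if pattern.search(spoken) else "no"

    return judge


history = ["Bob: Alice, did you see the game?", "Alice: Yeah, wild ending."]
judge = addressed_judge("AI")
print(should_respond(history, "AI", judge))
print(should_respond(history + ["Bob: AI, who won in 2019?"], "AI", judge))
```

Swapping `addressed_judge` for a real model call keeps the rest of the code unchanged, which makes it easy to try different response-timing policies.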

How You Can Use This Multi-Participant AI System

I've already heard great use cases for this multi-human, multi-AI conversational system. The best part?

You don't need any new building blocks beyond the ones I mentioned here:

- A little Jinja templating

- A little logic on the multi-participant STT side

Next Steps

If you want to build something like this, you've got options:

- We are a code-available project → clone the repo and start building.

- Or, join the waitlist for our cloud product (launching in the next 1-2 months).

Thanks for reading, and bug me on Twitter or Discord if you have questions.

Links

- GitHub: gabber-dev/gabber

- Twitter: @jackndwyer

- Discord: Join here