Give your LLM the ability to call external functions with Gabber's bolt-on tool calling

Introduction

AI-powered interactions are evolving beyond simple conversations. It's not enough to just talk to your LLM; you need to be able to make it do things. Gabber’s tool calling feature lets your LLM, and the AI personas you create with it, act on behalf of users, execute tasks, and integrate with external services. These tools run in parallel while the conversation is happening and work with any large language model (LLM).

What is Tool Calling?

Tool calling allows AI to interact with third-party services, run actions concurrently, and update conversations based on real-world results. Gabber makes it simple to add tool calling capabilities to any LLM, transforming passive chatbots into active, multi-functional assistants.
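Under the hood, a tool call is a small loop. The model names a function and its arguments, the application runs that function, and the result goes back into the conversation so the model can answer with it. The sketch below assumes the OpenAI chat-completions message shapes (`tool_calls`, `tool_call_id`, `role: "tool"`). The `get_weather` tool, `toolRegistry`, and `runToolCalls` are hypothetical names for illustration, not Gabber's API.

```javascript
// Hypothetical tool registry: maps a tool name to an async handler.
// Real handlers would call third-party APIs; this stub returns fixed data.
const toolRegistry = {
  get_weather: async ({ city }) => ({ city, forecast: "sunny" }),
};

// Run each tool call the model requested and build the `tool` messages
// (OpenAI chat-completions shape) that feed the results back to the model.
async function runToolCalls(toolCalls) {
  const toolMessages = [];
  for (const call of toolCalls) {
    const handler = toolRegistry[call.function.name];
    const args = JSON.parse(call.function.arguments || "{}");
    const result = handler
      ? await handler(args)
      : { error: `unknown tool: ${call.function.name}` };
    toolMessages.push({
      role: "tool",
      tool_call_id: call.id,
      content: JSON.stringify(result),
    });
  }
  return toolMessages;
}

// An assistant turn in which the model asks for one tool call.
const assistantTurn = {
  role: "assistant",
  content: null,
  tool_calls: [
    {
      id: "call_1",
      type: "function",
      function: { name: "get_weather", arguments: '{"city":"Paris"}' },
    },
  ],
};

const conversation = [
  { role: "user", content: "What's the weather in Paris?" },
  assistantTurn,
];

runToolCalls(assistantTurn.tool_calls).then((toolMessages) => {
  // Append the results; the next model call would see them and reply to the user.
  conversation.push(...toolMessages);
});
```

An unknown tool name produces an error object instead of throwing. The model still receives a result it can react to on its next turn.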

Key Benefits of Gabber’s Tool Calling:

- Parallel Execution: Run multiple tools simultaneously for faster results.

- Plug-and-Play: Works with any LLM without complex configuration.

- API-Driven: Seamlessly connect with third-party services.

- Secure Integration: Operates within Gabber’s usage token system for safe operations.
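Parallel execution keeps tool calls from stalling a conversation. When several tools start at once, the total wait is roughly the time of the slowest tool rather than the sum of all of them. This generic JavaScript sketch illustrates the idea with `Promise.allSettled`. It is not Gabber's internal scheduler, and `fakeTool`, the tool names, and the 100 ms delays are made up.

```javascript
// Hypothetical stand-in for a slow tool (e.g. an HTTP call to a third-party API).
function fakeTool(name, ms, shouldFail = false) {
  return () =>
    new Promise((resolve, reject) =>
      setTimeout(
        () => (shouldFail ? reject(new Error(`${name} failed`)) : resolve(`${name} done`)),
        ms
      )
    );
}

// Start every tool at once. allSettled waits for all of them and keeps
// one failure from discarding the other results.
async function runInParallel(toolFns) {
  const started = Date.now();
  const settled = await Promise.allSettled(toolFns.map((fn) => fn()));
  return { elapsedMs: Date.now() - started, settled };
}

const calls = [
  fakeTool("image_generation", 100),
  fakeTool("crm_lookup", 100),
  fakeTool("ticketing", 100, true), // simulated failure
];

runInParallel(calls).then(({ elapsedMs, settled }) => {
  // Total time tracks the slowest tool (~100 ms), not the sum (~300 ms).
  console.log(elapsedMs, settled.map((s) => s.status));
});
```

This sketch uses `Promise.allSettled` instead of `Promise.all`. That way, one failing tool (here the simulated `ticketing` call) does not throw away the results of the others.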

Example Tool Calls in Action:

- Image Generation: Request AI-generated images from third-party models in real time.

- Data Collection: Gather live updates from web services or databases.

- Customer Support: Interface with ticketing systems to resolve issues automatically.

- Payment Processing: Securely execute transactions using connected payment APIs.

- Advertising Campaigns: Trigger ads or marketing workflows within active chat threads.

Seamless Integration Process

Step 1: Connect to Your LLM

Add Gabber’s tool calling layer to your existing LLM calls by listing the tools you want available in the request.

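```javascript
import OpenAI from "openai";

// Placeholders: your API key and an OpenAI-compatible endpoint (assumed setup).
const openai = new OpenAI({ apiKey: "<your-api-key>", baseURL: "<your-endpoint>" });

const completion = await openai.chat.completions.create({
  model: "<your-LLM>",
  messages: [{ role: "user", content: "Hello" }],
  tools: ["account_number"], // name of a tool defined in Gabber (Step 2)
});
```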

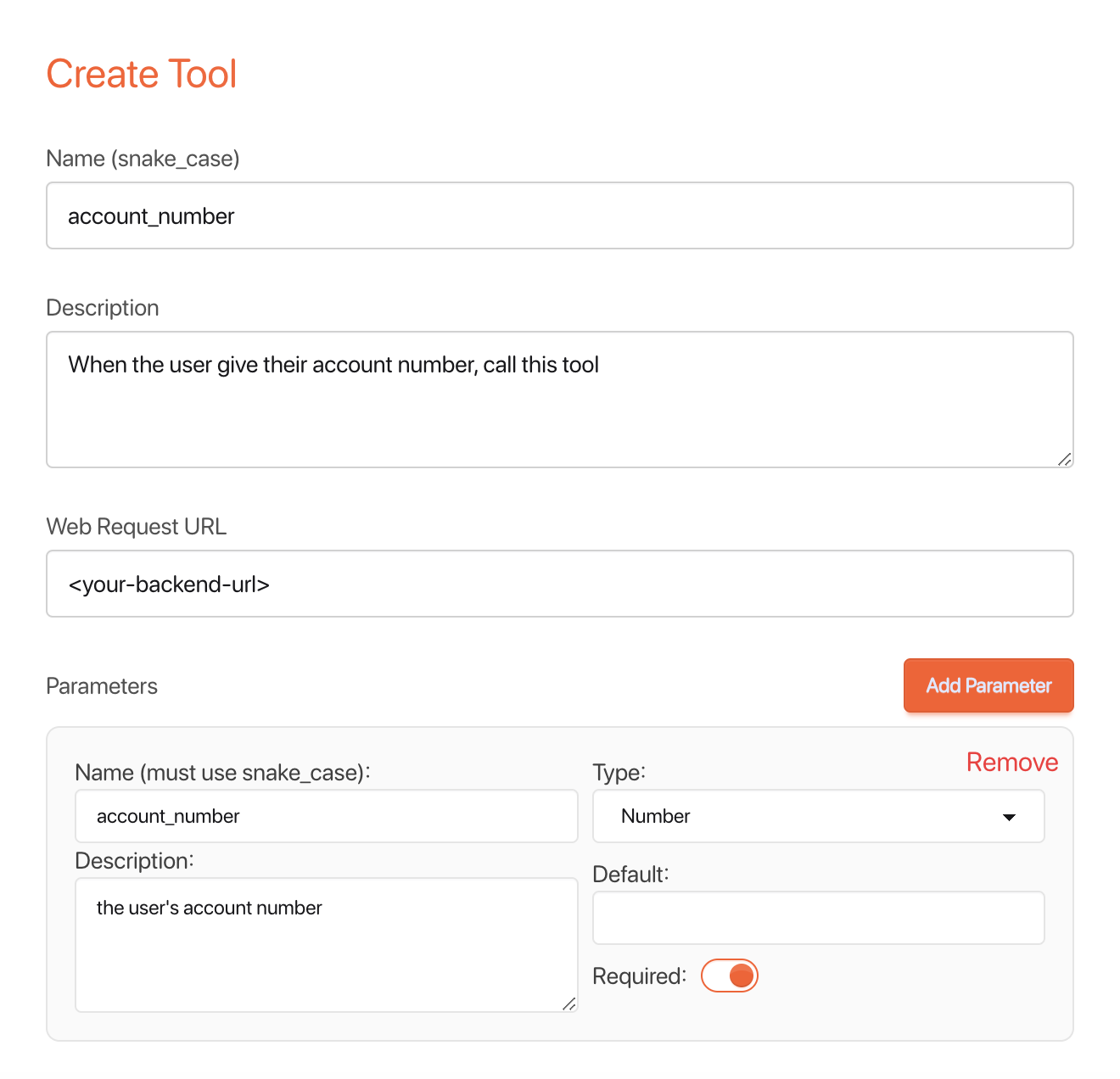
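Your endpoint may return the model's tool requests to the client in the standard OpenAI chat-completions shape. This is an assumption: Gabber may instead execute them against the endpoint you register in Step 2. If it does return them, you can read them from `completion.choices[0].message.tool_calls`. The `extractToolCalls` helper and the sample response below are hypothetical and hand-written, not real output.

```javascript
// Extract requested tool calls from an OpenAI-style chat completion.
// Returns [] when the model answered with plain text instead.
function extractToolCalls(completion) {
  const choice = completion.choices?.[0];
  const calls = choice?.message?.tool_calls ?? [];
  return calls.map((call) => ({
    id: call.id,
    name: call.function.name,
    args: JSON.parse(call.function.arguments || "{}"),
  }));
}

// Hand-written sample response (not real output) used for illustration.
const sampleCompletion = {
  choices: [
    {
      finish_reason: "tool_calls",
      message: {
        role: "assistant",
        content: null,
        tool_calls: [
          {
            id: "call_abc",
            type: "function",
            function: { name: "account_number", arguments: '{"account_number":"12345"}' },
          },
        ],
      },
    },
  ],
};

for (const { name, args } of extractToolCalls(sampleCompletion)) {
  console.log(`model requested ${name} with`, args);
}
```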

Step 2: Define Tool Call Functions

Create the tool in Gabber and assign it an API endpoint and any parameters it needs.

```json
{
  "name": "account_number",
  "description": "When the user provides their account number.",
  "url": "<your-api-endpoint>",
  "parameters": { "name": "account_number" }
}
```
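The URL in the definition needs a handler on your side that receives the tool's parameters and returns a result. The payload format is not specified here, so this sketch assumes Gabber sends the parameters as a JSON object such as `{ "account_number": "12345678" }`. The `handleAccountNumberTool` function, the digit-length rule, and the response shape are all hypothetical. It is written as a plain function so you can wire it into whichever HTTP framework serves `<your-api-endpoint>`.

```javascript
// Hypothetical handler for the "account_number" tool. It assumes Gabber
// POSTs the tool's parameters as a JSON object such as
// { "account_number": "12345678" }; check Gabber's docs for the real payload.
function handleAccountNumberTool(body) {
  const raw = String(body?.account_number ?? "").trim();

  // Reject anything that is not 6 to 17 digits (an example rule, not a standard).
  if (!/^\d{6,17}$/.test(raw)) {
    return { status: 400, json: { ok: false, error: "invalid account_number" } };
  }

  // A real endpoint would look the account up here; this stub only masks it
  // so the full number is never echoed back into the conversation.
  const masked = "*".repeat(raw.length - 4) + raw.slice(-4);
  return { status: 200, json: { ok: true, account: masked } };
}

console.log(handleAccountNumberTool({ account_number: "12345678" }));
// → status 200 with account "****5678"
```

The handler masks the number before returning it. This keeps the full account number out of the conversation history, which matters because tool results are fed back to the LLM.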


Step 3: That's it.

You now have a fully functioning, parallel-execution tool-calling LLM running alongside your conversation.

Real-World Use Cases

1. AI Customer Support Agents:

- Real-Time Ticketing: Log issues into support platforms.

- FAQ Handling: Pull from knowledge bases instantly.

- Payment Collection: Process refunds or orders seamlessly.

2. Marketing and Sales Assistants:

- Advertising Triggers: Launch campaigns directly within chat interactions.

- Lead Qualification: Collect user information and update CRM records.

3. Creative AI Tools:

- On-Demand Image Creation: Generate and display custom images during conversations.

- Real-Time Content Suggestions: Provide recommendations based on live trends.

Why Choose Gabber for Tool Calling?

- Fast and Scalable: Parallel execution reduces delays.

- Universal Compatibility: Adds easily to any LLM.

- Secure and Controlled: Managed through Gabber's usage tokens.

- Highly Versatile: Supports a wide range of tool integrations.

Conclusion

Gabber’s tool calling feature unlocks new possibilities for AI personas, moving them from static responses to dynamic, action-oriented agents. By enabling AI to run tasks, collect data, and even complete transactions, developers can create deeply interactive and valuable user experiences. Whether for customer support, creative tasks, or business operations, Gabber’s tool calling lets your AI do more, faster and smarter.

For a complete example, see the blog post "Building an AI Psychic Fortune Telling App With Code Samples" and its source code: https://github.com/gabber-dev/gabber-fortune-teller