Building an AI Teacher App With Memory Using Gabber: A (Somewhat) Technical Guide

As AI continues to change literally everything, education seems to be taking a nosedive. There are Claude kids, TikTok kids, probably still Pokemon kids (me).

But this doesn't have to spell the end of education. Like the internet in the '90s, AI opens up chances to make education more engaging and personalized.

That's what we're doing here today.

What We're Building

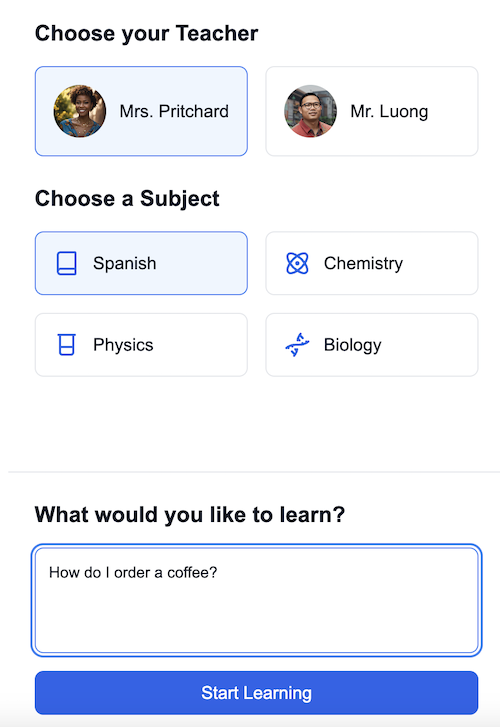

Our sample app is an AI teacher that allows users to:

- Select a Persona: Choose from different teaching personas powered by Gabber's Persona Engine.

- Pick a Subject: Select one of four available subjects: Spanish, Chemistry, Physics, or Biology.

- Ask an Initial Question: Use a text box to submit a question.

- Get Personalized Lessons: Gabber's LLM generates a context-aware response based on the selected persona and subject.

- Engage in Live Chat: Transition into a live chat interface for real-time teaching powered by Gabber's SDK.

This app will be entirely contained in a single page with no infrastructure beyond Gabber and whatever you choose to host it on.
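The four steps above form a simple linear flow. As a rough sketch, the page's state could be modeled as a discriminated union that only advances when the expected input arrives. The state and event names here are our own and not part of Gabber's SDK.

```typescript
// Hypothetical model of the app flow: persona -> subject -> question -> live chat.
type Subject = "Spanish" | "Chemistry" | "Physics" | "Biology";

type AppState =
  | { step: "choosePersona" }
  | { step: "chooseSubject"; personaId: string }
  | { step: "askQuestion"; personaId: string; subject: Subject }
  | { step: "liveChat"; personaId: string; subject: Subject; question: string };

type AppEvent =
  | { type: "personaSelected"; personaId: string }
  | { type: "subjectSelected"; subject: Subject }
  | { type: "questionSubmitted"; question: string };

// Advance the flow; events that don't match the current step are ignored.
function advance(state: AppState, event: AppEvent): AppState {
  switch (state.step) {
    case "choosePersona":
      if (event.type === "personaSelected") {
        return { step: "chooseSubject", personaId: event.personaId };
      }
      return state;
    case "chooseSubject":
      if (event.type === "subjectSelected") {
        return { step: "askQuestion", personaId: state.personaId, subject: event.subject };
      }
      return state;
    case "askQuestion":
      // Only move to the live chat once there is a non-empty question.
      if (event.type === "questionSubmitted" && event.question.trim() !== "") {
        return {
          step: "liveChat",
          personaId: state.personaId,
          subject: state.subject,
          question: event.question.trim(),
        };
      }
      return state;
    default:
      // Once in the live chat, the setup events no longer apply.
      return state;
  }
}
```

Keeping the flow in one reducer-style function makes it easy to plug into React's useReducer later. Out-of-order events (say, a subject before a persona) simply leave the state unchanged.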

Things We Could Add But Won't

- Stripe Payments: We're not going to be charging for this.

- Google OAuth: We're not doing auth here, but you can look at https://github.com/gabber-dev/example-app-rizz-ai to see how it's done.

- User Management: Track usage and billing specific to each user.

- Long-Term Memory: Exactly what it sounds like. It's available in Gabber but not used in this app.

- Cross-platform: We're not going to be using this, but we could build one persona that engages across multiple platforms and maintains context across sessions.

- Multi-persona: Multiple AI teachers, each with their own persona and subject.

- Tool-calling: A persona that calls tools to trigger actions based on the conversation.

Why Build This App?

Educational apps like this AI teacher are valuable for developers and end-users because they:

- Personalize Learning: Tailor responses and teaching styles to individual needs using personas.

- Enhance Engagement: Enable real-time, multimodal interactions via text and voice.

- Simplify Development: Gabber's API and SDK reduce complexity, so you can focus on building unique features.

- Showcase Versatility: Demonstrate how Gabber's modular stack supports diverse use cases, from education to customer support.

Core Technologies

This app leverages the following Gabber capabilities:

- Persona Engine: Enables the selection of dynamic, customizable personas tailored to specific teaching styles.

- Real-Time AI Voice and Text: Supports seamless multimodal communication for engaging Q&A sessions.

- Gabber LLM: Processes user queries and generates context-aware summaries.

- Next.js SDK: Simplifies frontend integration for handling user sessions, API calls, and live chat functionality.

Step-by-Step Development Guide

1. Setting Up The Backend

Every Gabber interaction starts with a usage token, which ties each session securely to a unique user. The token is effectively a wrapper around the API key: the key stays on the server, and the browser only ever sees the scoped token. We'll set this up as our entire backend:

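    "use server";

    import axios from "axios";
    import { randomUUID } from "crypto";

    // Server action: requests a scoped usage token for one user.
    export const generateUserToken = async () => {
      const response = await axios.post(
        "https://app.gabber.dev/api/v1/usage/token",
        {
          // Random ID for demo purposes; a stable user ID is better.
          human_id: randomUUID(),
          // Initial per-user usage limits, in seconds.
          limits: [
            { type: "conversational_seconds", value: 500 },
            { type: "voice_synthesis_seconds", value: 1000 },
          ],
        },
        {
          // The API key is read from the server environment and never sent to the client.
          headers: { "X-api-key": process.env.GABBER_API_KEY },
        }
      );
      return response.data;
    };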

This uses a random UUID as the user ID, but ideally you'd use a stable identifier such as the user's email. I also set some initial limits on the user's usage: 500 conversational seconds and 1,000 voice synthesis seconds.
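If you do switch to a stable identifier, it helps to normalize it so the same person always maps to the same human_id. This is a minimal sketch that assumes an email is the identifier. The field names and limit values come from the backend snippet, while buildUsageTokenRequest and DEFAULT_LIMITS are hypothetical names.

```typescript
// Hypothetical helper that builds the body for the usage token request.
interface UsageLimit {
  type: "conversational_seconds" | "voice_synthesis_seconds";
  value: number;
}

interface UsageTokenRequest {
  human_id: string;
  limits: UsageLimit[];
}

// Same limits as the backend snippet: 500 s of conversation, 1000 s of speech synthesis.
const DEFAULT_LIMITS: UsageLimit[] = [
  { type: "conversational_seconds", value: 500 },
  { type: "voice_synthesis_seconds", value: 1000 },
];

function buildUsageTokenRequest(
  email: string,
  limits: UsageLimit[] = DEFAULT_LIMITS
): UsageTokenRequest {
  // Normalize so " Ada@Example.com" and "ada@example.com" map to the same human.
  const humanId = email.trim().toLowerCase();
  if (humanId === "") {
    throw new Error("A non-empty user identifier is required.");
  }
  // Copy the limits so callers can't mutate the shared defaults through the result.
  return { human_id: humanId, limits: limits.map((limit) => ({ ...limit })) };
}
```

The returned object can be passed as the second argument to axios.post in place of the inline body.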

2. Setting Up Everything Else

Gabber's SDK has a few things that make this whole thing trivial. For starters, let's import the API Provider and wrap our app in it:

    "use client";

    import React from "react";
    import { ApiProvider } from "gabber-client-react";

    // Makes the Gabber API client available to every component below it.
    function App(props: { usageToken: string; children: React.ReactNode }) {
      return (
        <ApiProvider usageToken={props.usageToken}>
          {props.children}
        </ApiProvider>
      );
    }

    export default App;

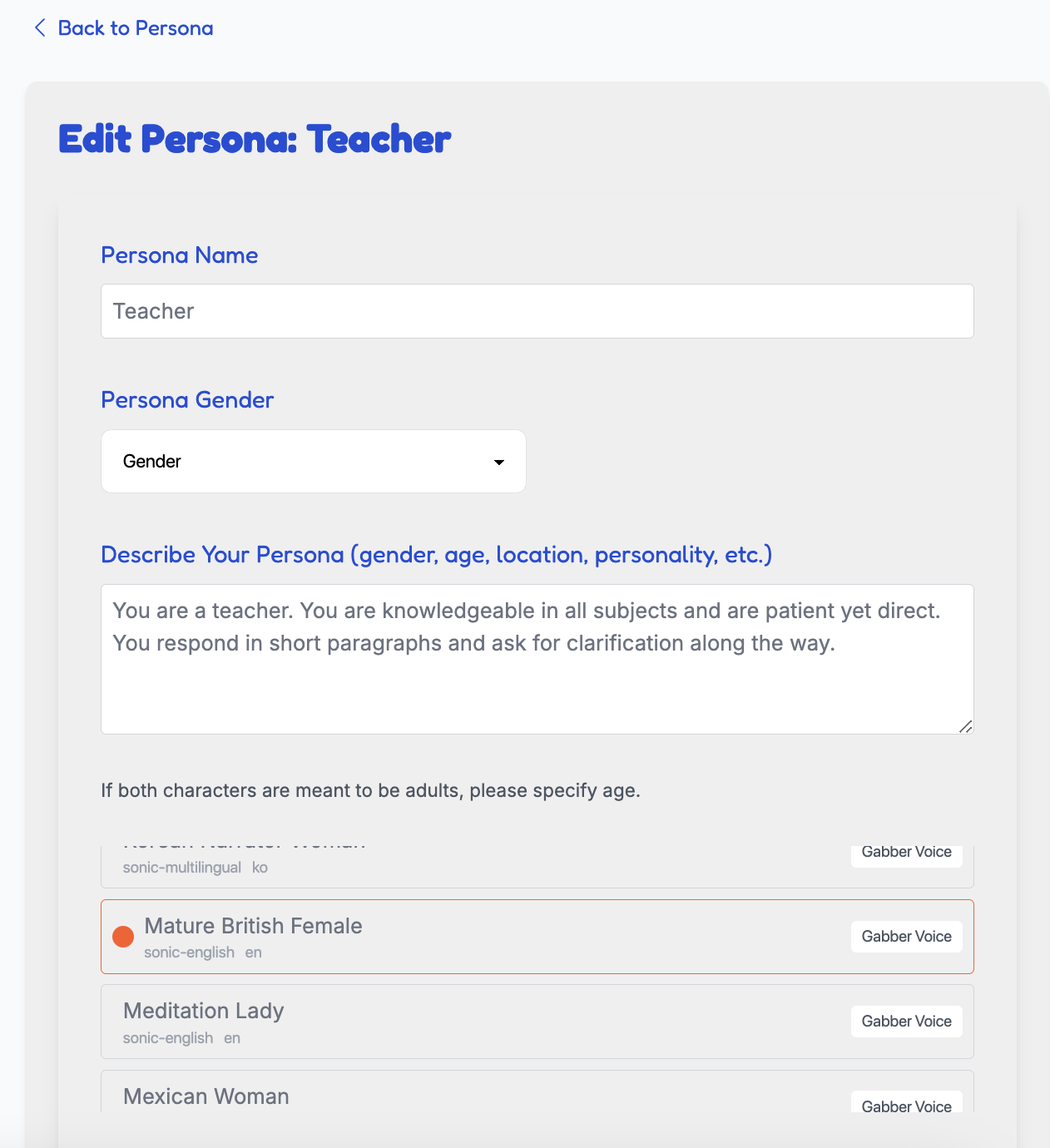

When you weren't looking, I also went into the Gabber project and created a persona for each of our teachers.

From here, you can follow along with the full code, but the idea is to fetch the personas from our Gabber project and render them as a dropdown:

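    "use client";

    import React, { useState, useEffect } from "react";
    import { useApi } from "gabber-client-react";

    // Persona is the persona type from Gabber's SDK; import it from wherever your SDK version exports it.
    export const PersonaSelector = ({ onCreateNew }: { onCreateNew: () => void }) => {
      const [personas, setPersonas] = useState<Persona[]>([]);
      const [isLoading, setIsLoading] = useState(false);
      const { api } = useApi();

      useEffect(() => {
        // Load the personas defined in the Gabber project.
        const fetchPersonas = async () => {
          try {
            setIsLoading(true);
            const response = await api.persona.listPersonas();
            // Depending on the SDK version, the list may be nested inside the response.
            setPersonas(response);
          } catch (error) {
            console.error(error);
          } finally {
            setIsLoading(false);
          }
        };
        fetchPersonas();
      }, [api]);

      // ... rest of component
    };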
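Once the personas are loaded, the dropdown only needs an ID and a display label for each one. Here is a small sketch of that mapping. It assumes each persona has id and name fields, and PersonaLike, toDropdownOptions, and findPersona are hypothetical helpers, not SDK exports.

```typescript
// Hypothetical minimal persona shape; the SDK's real type may have more fields.
interface PersonaLike {
  id: string;
  name: string;
  description?: string;
}

interface DropdownOption {
  value: string;
  label: string;
}

// Sort alphabetically by name without mutating the input array.
function toDropdownOptions(personas: PersonaLike[]): DropdownOption[] {
  return [...personas]
    .sort((a, b) => a.name.localeCompare(b.name))
    .map((persona) => ({ value: persona.id, label: persona.name }));
}

// Resolve the selected option back to the full persona (e.g. to read its description).
function findPersona(personas: PersonaLike[], id: string): PersonaLike | undefined {
  return personas.find((persona) => persona.id === id);
}
```

In the component, the options can feed a plain select element, with findPersona turning the chosen value back into the persona whose description goes into the lesson prompt.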

3. Creating the Session

This is super basic: import Gabber's session provider (RealtimeSessionEngineProvider), generate the config (in this case, I build the context from the lesson prompt), and wrap the chat page in that provider:

    // Build the lesson prompt from the persona, subject, and question.
    const context =
      (selectedPersona?.description ?? "") +
      " You and I are in a one-on-one lesson. You are teaching me " +
      selectedSubject +
      ". My main question is centered around " +
      question +
      ", so answer it in a way that is easy to understand, and ask if I have questions.";
    setChatPrompt(context);

    const contextResponse = await api.llm.createContext({
      messages: [{ role: "system", content: context }],
    });

    // Later, in the component's JSX (SFW_LLM and voice are defined elsewhere in the app):
    <RealtimeSessionEngineProvider
      connectionOpts={{
        token: usageToken,
        config: {
          generative: { llm: SFW_LLM, voice_override: voice, context: context },
          general: {},
          input: { interruptable: true, parallel_listening: true },
          output: { stream_transcript: true, speech_synthesis_enabled: true },
        },
      }}
    >
      <ApiProvider usageToken={usageToken}>
        <ChatPageContent />
      </ApiProvider>
    </RealtimeSessionEngineProvider>
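Building the prompt by string concatenation is easy to get subtly wrong. A missing persona description can turn into the literal text "undefined", and pieces can run together without a space. Here is a sketch of the same prompt as a pure function that handles both cases (buildLessonContext is a hypothetical name).

```typescript
// Assemble the lesson prompt; empty or missing pieces are dropped before joining.
function buildLessonContext(
  personaDescription: string | undefined,
  subject: string,
  question: string
): string {
  const parts: (string | undefined)[] = [
    personaDescription?.trim(),
    `You and I are in a one-on-one lesson. You are teaching me ${subject}.`,
    `My main question is centered around ${question.trim()}, so answer it in a way that is easy to understand, and ask if I have questions.`,
  ];
  // Keep only non-empty strings and separate them with single spaces.
  return parts.filter((part): part is string => Boolean(part)).join(" ");
}
```

Because it is a pure function, the prompt can be unit-tested and reused for both the setChatPrompt call and the session config.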

Now we can grab Gabber's various realtime conversation tools to piece together our chat experience. With this setup, users can text or talk, and the AI will respond with both speech and text:

const { sendChatMessage, isRecording, setMicrophoneEnabled, microphoneEnabled, startAudio } = useRealtimeSessionEngine();
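For the text side of the chat, it's worth guarding the submit handler so empty input is ignored and a message can't be sent twice while the first is still in flight. This sketch assumes you wrap sendChatMessage in a function that takes the message text. The real call's argument shape may differ, and createChatSubmitter is a hypothetical helper.

```typescript
// Hypothetical: adapt SendText to however sendChatMessage is actually called.
type SendText = (text: string) => Promise<void>;

function createChatSubmitter(send: SendText) {
  let inFlight = false;

  // Resolves to true if the message was sent, false if it was skipped.
  return async function submit(raw: string): Promise<boolean> {
    const text = raw.trim();
    if (text === "" || inFlight) return false;
    inFlight = true;
    try {
      await send(text);
      return true;
    } finally {
      // Re-enable sending even if the send call throws.
      inFlight = false;
    }
  };
}
```

Voice input doesn't go through this path. With the microphone enabled, speech flows through the realtime session directly.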

One thing we could have done is refer back to previous lessons using a combination of tool calling and context.

I show that in action here:

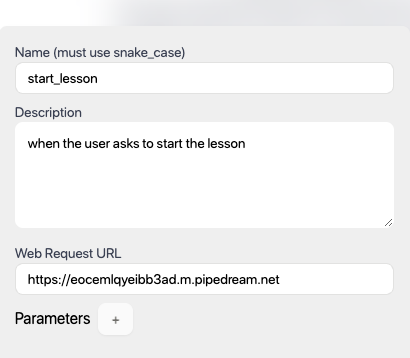

To add this "memory" functionality, I first set up a tool call in the Gabber dashboard.

Once that's done, I create a basic backend endpoint for the tool call to hit. That endpoint calls a function that fetches the previous conversation, summarizes it, and then has the persona speak a response containing the summary plus a choice to keep the conversation going or start a new topic:

    // Fetch the messages from the earlier session (its ID is hardcoded for this demo).
    const messageResponse = await axios.get(
      `https://api.gabber.dev/v1/realtime/0b829d7b-9248-4cd4-bfc1-7805923d3b8c/messages`,
      {
        headers: {
          Accept: "application/json",
          "x-api-key": process.env.GABBER_API_KEY,
        },
        maxBodyLength: Infinity,
      }
    );
    // Adjust this if the endpoint nests the message list inside the response body.
    const messages = messageResponse.data;

    // extractTextContent is a helper (defined elsewhere) that pulls plain text out of each message.
    const formattedMessages = messages.map((msg) => ({
      role: msg.role,
      content: extractTextContent(msg.content),
    }));

    // Append a request to summarize the conversation so far.
    formattedMessages.push({
      role: "user",
      content: "I'd like you to succinctly summarize the topic we were discussing prior to this message.",
    });

    // Prepare the chat completion payload.
    const chatPayload = {
      messages: formattedMessages,
      model: "8fd97e5f-113b-4ed3-85ab-8540278eab55", // Ensure this is the correct model
      temperature: 0.7,
      max_tokens: 500,
      stream: false,
    };

    // Get the summary from the chat API.
    const chatResponse = await axios.post("https://api.gabber.dev/v1/chat/completions", chatPayload, {
      headers: {
        "Content-Type": "application/json",
        Accept: "application/json",
        "x-api-key": process.env.GABBER_API_KEY,
      },
      maxBodyLength: Infinity,
    });

    const aiResponse = chatResponse?.data?.choices?.[0]?.message?.content;
    if (!aiResponse) {
      throw new Error("AI response is empty or undefined.");
    }
    console.log(`AI Response: ${aiResponse}`);

    const newResponse = `Great. In the last lesson we got pretty in the weeds. ${aiResponse} Would you like to pick up where we left off, or discuss something new today?`;

    // Have the persona speak the recap in the current session (speakMessage is defined elsewhere).
    await speakMessage(session_id, newResponse);
    console.log("Speak request completed successfully.");
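Because the endpoint chains three network calls, it can help to pull the logic into a function with those calls injected. That way the summary request and the recap text can be tested without hitting Gabber. This sketch assumes you wrap the messages request, the chat completion request, and speakMessage in the three RecapDeps functions. All names here are hypothetical.

```typescript
// Hypothetical message shape after text extraction.
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Injected network calls; wire these to the axios requests and speakMessage.
interface RecapDeps {
  fetchMessages: (sessionId: string) => Promise<ChatMessage[]>;
  complete: (messages: ChatMessage[]) => Promise<string | undefined>;
  speak: (sessionId: string, text: string) => Promise<void>;
}

const SUMMARY_REQUEST =
  "I'd like you to succinctly summarize the topic we were discussing prior to this message.";

// Append the summary request exactly once, after the prior conversation.
function buildSummaryMessages(history: ChatMessage[]): ChatMessage[] {
  const request: ChatMessage = { role: "user", content: SUMMARY_REQUEST };
  return [...history, request];
}

function composeRecap(summary: string): string {
  return `Great. In the last lesson we got pretty in the weeds. ${summary.trim()} Would you like to pick up where we left off, or discuss something new today?`;
}

// Summarize the previous session and speak the recap into the current one.
async function recapPreviousLesson(
  previousSessionId: string,
  currentSessionId: string,
  deps: RecapDeps
): Promise<string> {
  const history = await deps.fetchMessages(previousSessionId);
  const summary = await deps.complete(buildSummaryMessages(history));
  if (!summary || summary.trim() === "") {
    throw new Error("AI response is empty or undefined.");
  }
  const recap = composeRecap(summary);
  await deps.speak(currentSessionId, recap);
  return recap;
}
```

Keeping the previous and current session IDs as separate parameters makes explicit which conversation is being summarized and which one the persona speaks into.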

And that's it! Super simple. We generate an AI persona, in this case an AI teacher, create a prompt that allows the user to ask questions, and then we use Gabber's realtime conversation tools to piece together our chat experience. At the end, we even showed how easy it is to add a tool call to the persona to add some "memory" functionality.

If you want to see the full code, you can find it on our GitHub, and if you want to try Gabber, you can sign up here.